Appendix B - 107

APPENDIX B

SAMPLE FORMS

Contact or Encounter Form

Form for Items Distributed

Form for Items Received


CONTACT OR ENCOUNTER FORM

ONTACT

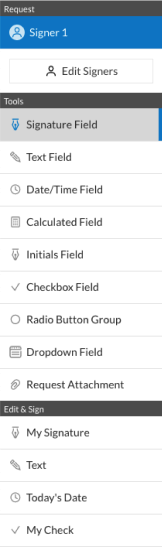

Design a brief form, similar to the one shown here, to keep track of all contacts related to program activities between program personnel and people outside the program. Tailor the type of contact and services provided sections to the activities conducted by your program. For example, an enforcement program might have a list of reasons for stopping drivers or a list of types of sobriety checks.

If a category labeled Other is used frequently, add new categories to accommodate the items that are being assigned to Other. Below is a general contact form that can be adapted for any program to prevent unintentional injury.
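If contact forms are entered into a spreadsheet or database, the check for an overused Other category can be automated. The Python sketch below is illustrative only. It assumes that each form's Type of Contact answer is stored as a text label and that write-in answers are kept in a separate list. Its 10 percent threshold and three-mention cutoff are hypothetical values, not recommendations from this primer.

```python
from collections import Counter

# Hypothetical cutoffs: the primer says only that "Other" is "used frequently".
OTHER_LABEL = "Other (Specify)"
OTHER_SHARE_THRESHOLD = 0.10   # flag if more than 10% of answers are "Other"
MIN_WRITE_IN_COUNT = 3         # a write-in seen this often may deserve its own box


def other_share(answers):
    """Return the fraction of answers recorded as 'Other (Specify)'."""
    if not answers:
        return 0.0
    return Counter(answers)[OTHER_LABEL] / len(answers)


def candidate_categories(write_ins, min_count=MIN_WRITE_IN_COUNT):
    """Return write-in answers (case-insensitive) given at least min_count times."""
    counts = Counter(text.strip().lower() for text in write_ins)
    return sorted(item for item, n in counts.items() if n >= min_count)


# Illustrative data only.
types_of_contact = ["Telephone", OTHER_LABEL, "Personal Meeting",
                    OTHER_LABEL, "Electronic Mail", OTHER_LABEL]
write_ins = ["Text message", "text message", "Text Message ", "Fax"]

share = other_share(types_of_contact)
print(f"Share of 'Other' answers: {share:.0%}")
if share > OTHER_SHARE_THRESHOLD:
    print("Consider adding:", candidate_categories(write_ins))
```

Normalizing case and spacing before counting keeps "Text message" and "text message " from being treated as different write-ins.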

CONTACT OR ENCOUNTER FORM

Contact/Encounter No.: [Number Consecutively]

Date:

Type of Contact
[ ] Telephone
[ ] Personal Meeting
[ ] Electronic Mail
[ ] Other (Specify)

Other Assistance Provided
[ ] Information Only
[ ] Training
[ ] Consultation
[ ] Counseling
[ ] Curriculum or Materials
[ ] Other (Specify)

Who Initiated Contact
[ ] Program Staff
[ ] Program Participant
[ ] Other (Specify)

Program Staff Who Participated in Contact:

Purpose of Contact
[ ] Request for Financial Support
[ ] Request for Legislative Support
[ ] Request for Other Support
[ ] Request for Volunteer Services
[ ] Offer of Volunteer Services
[ ] Request to Participate in Program
[ ] Request for Information
[ ] Request for Incentive
[ ] Other (Specify)


FORM FOR ITEMS DISTRIBUTED

A distribution form should be completed for each unit (i.e., person or household in the target population) to whom items (e.g., infant safety seats) were distributed. The purpose is to document the number of items distributed and the characteristics of the people who received the item(s). Below is a generic form that can be adapted to suit your program.

ITEMS DISTRIBUTED

Distribution No.: [Number Consecutively]

Date:

List of Items Distributed:

Number of item(s) distributed:

Location where item(s) were distributed:

Item(s) Distributed To Households

Number of people in household: ___________________

Age and sex of people in household: [Divide by appropriate intervals, say 5- or 10-year intervals]
10 years or under    Females: _______    Males: _______
11–20 years          Females: _______    Males: _______
21–30 years          Females: _______    Males: _______
31–40 years          Females: _______    Males: _______
41–50 years          Females: _______    Males: _______
51–60 years          Females: _______    Males: _______
61–70 years          Females: _______    Males: _______
71 years or older    Females: _______    Males: _______

Item(s) Distributed To Individuals

Age and sex of individual person: [Divide by appropriate intervals, say 5- or 10-year intervals]
10 years or under    Female: [ ]    Male: [ ]
11–20 years          Female: [ ]    Male: [ ]
21–30 years          Female: [ ]    Male: [ ]
31–40 years          Female: [ ]    Male: [ ]
41–50 years          Female: [ ]    Male: [ ]
51–60 years          Female: [ ]    Male: [ ]
61–70 years          Female: [ ]    Male: [ ]
71 years or older    Female: [ ]    Male: [ ]

Other Assistance Provided
[ ] Installation
[ ] Training
[ ] Counseling
[ ] Referral


FORM FOR ITEMS RECEIVED

Keep track of all items given to you by members of the target population. An example of items received would be car safety seats returned to a loan program. The purpose is to document the number of items received and the characteristics of the people who hand in the items. Below is a generic form that can be adapted to suit your program.

ITEMS RECEIVED

Receipt No.: [Number Consecutively]

Date:

List of Items Received:

Number of item(s) received:

Location where item(s) were received:

Item(s) Received From Households

Number of people in household: ___________________

Age and sex of people in household: [Divide by appropriate intervals, say 5- or 10-year intervals]
10 years or under    Females: _______    Males: _______
11–20 years          Females: _______    Males: _______
21–30 years          Females: _______    Males: _______
31–40 years          Females: _______    Males: _______
41–50 years          Females: _______    Males: _______
51–60 years          Females: _______    Males: _______
61–70 years          Females: _______    Males: _______
71 years or older    Females: _______    Males: _______

Item(s) Received From Individuals

Age and sex of individual person: [Divide by appropriate intervals, say 5- or 10-year intervals]
10 years or under    Female: [ ]    Male: [ ]
11–20 years          Female: [ ]    Male: [ ]
21–30 years          Female: [ ]    Male: [ ]
31–40 years          Female: [ ]    Male: [ ]
41–50 years          Female: [ ]    Male: [ ]
51–60 years          Female: [ ]    Male: [ ]
61–70 years          Female: [ ]    Male: [ ]
71 years or older    Female: [ ]    Male: [ ]

Other Assistance Provided
[ ] Counseling
[ ] Referral
[ ] Training
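When completed distribution or receipt forms are tallied, each person's age must be placed in one of the intervals printed on the form. The Python sketch below assumes the 10-year intervals shown on the forms above and records stored as (age, sex) pairs. The function names and record layout are hypothetical, and the interval labels use a plain hyphen in place of the en dash.

```python
from collections import Counter


def age_interval(age):
    """Map an age in whole years to the form's 10-year intervals."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if age <= 10:
        return "10 years or under"
    if age >= 71:
        return "71 years or older"
    lower = (age - 1) // 10 * 10 + 1   # 11, 21, ..., 61
    return f"{lower}-{lower + 9} years"


def tally(people):
    """Count people by (age interval, sex) from (age, sex) pairs."""
    return Counter((age_interval(age), sex) for age, sex in people)


# One hypothetical household that received an item.
household = [(34, "Female"), (36, "Male"), (4, "Female"), (1, "Male")]
print("Number of people in household:", len(household))
for (interval, sex), count in sorted(tally(household).items()):
    print(f"{interval}  {sex}: {count}")
```

If your program uses 5-year intervals instead, only the arithmetic in `age_interval` needs to change.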


APPENDIX C

CHECKLIST OF TASKS

For Programs to Prevent Unintentional Injury

Program Development

As soon as you or someone in your organization has the idea for a program to prevent unintentional injury, begin evaluation.

1. Investigate to make sure an effective program similar to the one you envision does not already exist in your community.

2. If a similar program does exist and if it is fully meeting the needs of your proposed target population, modify your ideas for the program so that you can fill a need that is not being met.

3. Decide where you will seek financial support.
   - Find out which federal, state, or local government agencies give grants for the type of program you envision.
   - Find out which businesses and community groups are likely to support your goals and provide funds to achieve them.

4. Decide where you will seek nonfinancial support.
   - Find out which federal, state, or local government agencies provide technical assistance for the type of program you envision.
   - Find out which businesses and community groups support your goals and are likely to provide technical assistance, staff, or other nonfinancial support.

5. Develop an outline of a plan for your injury-prevention program. Include in the outline the methods you will use to provide the program service to participants and the methods you will use to evaluate your program’s impact and outcome.


6. Evaluate the outline. For example, conduct personal interviews or focus groups with a small number of the people you will try to reach with your injury-prevention program. Consult people who have experience with programs similar to the one you envision, and ask them to review your plan. Modify your plan on the basis of evaluation results.

7. Develop a plan to enlist financial and nonfinancial support from all the agencies, businesses, and community organizations you have decided are likely sources of support. Use the outline of your plan for the injury-prevention program to demonstrate your commitment, expertise, and research.

8. Evaluate the plan for obtaining support. For example, conduct personal interviews with business leaders in your community. Modify your plan on the basis of evaluation results.

9. Put your plan for obtaining support into action.

10. Keep track of all contacts you make with potential supporters.

11. If unexpected problems arise while you are seeking support, re-evaluate your plan or the aspect of your plan that seems to be the source of the problem. For example, if businesses are contributing much less than you had good reason to expect, then seek feedback from businesses that are contributing and those that are not. Or if you did not receive grant funds for which you believed you were qualified, contact the funding agency to find out why your proposal was rejected. Modify your plan according to your re-evaluation results, and continue seeking support.

12. When you have enough support for your program, expand on the outline of your plan for the injury-prevention program. Include in the design a mechanism for evaluating the program’s impact and outcome.

13. Evaluate your program’s procedures, materials, and activities. For example, conduct focus groups within your target population. Modify the plan on the basis of evaluation results.

14. Develop forms to keep track of program participants, program supporters, and all contacts with participants, supporters, or other people outside the program.

15. Measure the target population’s knowledge, attitudes, beliefs, and behaviors that relate to your program goals. The results are your baseline measurements.


Program Operation

1. Put your program into operation.
   - Track all program-related contacts (participants, supporters, or others). Track all items either distributed to or collected from participants.
   - As soon as the program has completed its first encounter with the target population, assess any changes in program participants’ knowledge, attitudes, beliefs, and (if appropriate) behaviors.

2. Continue tracking and assessing program-related changes in participants throughout the life of the program. Keep meticulous records.

3. If unexpected problems arise while the program is in operation, re-evaluate (using qualitative methods) to find the cause and solution. For example, your records might show that not as many people as expected are responding to your program’s message, or your assessment of program participants might show that their knowledge is not increasing. Modify the program on the basis of evaluation results.

4. Evaluate ongoing programs (e.g., classes on fire safety given each year to third graders) at suitable intervals to see how well the program is meeting its goal of reducing injury-related morbidity and mortality.

Program Completion

1. Use the data you have collected throughout the program to evaluate how well the program met its goals: to increase behaviors that prevent unintentional injury and, consequently, to reduce the rate of injuries and injury-related deaths.

2. Use the results of this evaluation to justify continued funding and support for your program.

3. If appropriate, publish the results of your program in a scientific journal.


APPENDIX D

BIBLIOGRAPHY

Evaluation in General

Capwell EM, Butterfoss F, Francisco VT. “Why Evaluate?” Health Promotion Practice, Vol. 1(1):15–20; January 1999.

CDC. “A Framework for Assessing the Effectiveness of Disease and Injury Prevention.” MMWR Recommendations and Reports, Vol. 41 (RR03):1–12; March 27, 1992.

CDC. Evaluating Community Efforts to Prevent Cardiovascular Diseases: Work-Group on Health Promotion and Community Development. Atlanta, GA: National Center for Chronic Disease Prevention and Health Promotion; 1995.

CDC. “Framework for Program Evaluation in Public Health.” MMWR Recommendations and Reports, Vol. 48 (RR11):1–40; September 17, 1999.

CDC. Measuring Violence-Related Attitudes, Beliefs, and Behaviors Among Youths: A Compendium of Assessment Tools. Atlanta, GA: National Center for Injury Prevention and Control, Publication No. 099-5626; 1998.

Fulbright-Anderson K, Kubisch AC, Connell JP. “New Approaches to Evaluating Community Initiatives.” Vol. 2: Theory, Measurement and Analysis. Queenstown, MD: The Aspen Institute; 1998.

Hawe P, Degeling D, Hall J. Evaluating Health Promotion: A Health Worker’s Guide. Sydney: MacLennan and Petty Pty, Limited; 1990.

Israel BA, Cummings M, Dignan MB, et al. “Evaluation of Health Education Programs: Current Assessment and Future Directions.” Health Education Quarterly, Vol. 22(3):364–389; 1995.


McKenzie JF, Smeltzer JL. Planning, Implementing and Evaluating Health Promotion Programs (2nd Edition). Needham Heights, MA: Allyn and Bacon; 1997.

Rivera F, Sleet D, Acree K, et al. “Chapter 4: Program Design and Evaluation.” In: National Committee on Injury Prevention and Control (Eds.). Injury Prevention: Meeting the Challenge. Supplement to American Journal of Preventive Medicine, Vol. 5, No. 3. New York: Oxford University Press; 1989.

Rootman I, Goodstadt M, Hyndman B, et al. (Eds.). Evaluation in Health Promotion: Principles and Perspectives. Copenhagen, Denmark: World Health Organization, Euro; 1999 (in press).

Rossi PH, Freeman HE. Evaluation: A Systematic Approach. Newbury Park, CA: Sage; 1993.

Udinsky BF, Osterlind SJ, Lynch SW. Evaluation Resource Handbook: Gathering, Analyzing, Reporting Data. San Diego: Edits; 1981.

Wye CG, Hatry HP. Timely, Low-Cost Evaluation in the Public Sector. San Francisco: Jossey-Bass; 1988.

Experimental and Quasi-Experimental Design

Campbell DT, Stanley JC. Experimental and Quasi-Experimental Designs for Research. Boston: Houghton Mifflin; 1963.

Psychological Testing

Anastasi A. Psychological Testing. 6th Edition. New York: Macmillan; 1988.

Questionnaires and Questionnaire Design

Bagozzi RP. “Measurement in Marketing Research: Basic Principles of Questionnaire Design.” In: Bagozzi RP, Editor. Principles of Marketing Research. Cambridge, MA: Blackwell Business; 1994.

Educational Testing Service. “Attitude Tests.” In: The ETS Test Collection Catalog, Vol. 5. Phoenix, AZ: Oryx Press; 1991.


Erdos PL. Professional Mail Surveys. New York: McGraw-Hill; 1970.

Hayes BE. Measuring Customer Satisfaction: Development and Use of Questionnaires. Milwaukee: ASQC Quality Press; 1992.

Kanda N. “Group Interviews and Questionnaire Surveys.” Qual Control 1994; August:77–85.

Nogami GY. “Eight Points for More Useful Surveys.” Qual Progress 1996; October:93–6.

Oppenheim AN. Questionnaire Design, Interviewing and Attitude Measurement. London: Pinter; 1992.

Sudman S, Bradburn NM. Asking Questions. San Francisco: Jossey-Bass; 1982.

Zimmerman DE, Muraski ML. The Elements of Information Gathering: A Guide for Technical Communicators, Scientists and Engineers. Phoenix, AZ: Oryx Press; 1995.

Risk for Morbidity or Mortality

Occupant Restraints in Motor Vehicles

Boehly WA, Lombardo IV. “Safety Consequences of the Shift to Small Cars in the 1980's.” In: National Highway Traffic Safety Administration: Small Car Safety in the 1980's. Washington, DC: US Department of Transportation; 1980.

Bicycle Helmets

Thompson DC, Thompson RS, Rivara FP, Wolf ME. “Case-Control Study of the Effectiveness of Bicycle Safety Helmets in Preventing Facial Injury.” Am J Public Health 1990; 80:1471–4.

Thompson RS, Rivara FP, Thompson DC. “Case-Control Study of the Effectiveness of Bicycle Safety Helmets.” New Engl J Med 1989; 320:1361–7.


Smoke Detectors

Hall JR. “The U.S. Experience with Smoke Detectors.” NFPA J 1994; Sept/Oct:36–46.

Mallonee S, Istre GR, Rosenberg M, Reddish-Douglas M, Jordan F, Silverstein P, et al. “Surveillance and Prevention of Residential Fires.” New Engl J Med 1996; 335:27–31.

Runyan CW, Bangdiwala SI, Linzer MA, Sacks JJ, Butts J. “Risk Factors for Fatal Residential Fires.” New Engl J Med 1992; 327:859–63.

Sampling Methods and Survey Research

Fowler FJ. “Survey Research Methods.” In: Applied Social Research Methods Series, Vol. 1. Newbury Park, CA: Sage; 1993.

Green LW, Lewis FM. Measurement and Evaluation in Health Education and Health Promotion. Palo Alto, CA: Mayfield; 1986.

Rossi PH, Wright JD, Anderson AB. Handbook of Survey Research. San Diego: Academic Press; 1983.


APPENDIX E

GLOSSARY

Attitudes: People’s biases, inclinations, or tendencies that influence their response to situations, activities, people, or program goals.

Baseline information: Data gathered on the target population before an injury-prevention program begins.

Closed-ended questions: Questions that allow respondents to choose only from a list of possible answers. (Compare Open-ended questions.)

Comparison group: See Control group.

Contact: Any personal interaction between program staff and a person or household in the target population (sometimes called an encounter). Also, the person or household with whom program staff interacted.

Control group (or comparison group): A group whose characteristics are as similar as possible to those of the intervention group but that does not receive the intervention. To evaluate program effects, evaluators compare the change observed in the intervention group with the change observed in the control group. See also Intervention group.

Encounter (contact): In evaluation, any personal interaction between a program and a person, household, or group of people in the target population.

Experimental designs: In evaluation, methods that involve randomly assigning people in the target population to one of two or more groups in order to control for the effects of history and maturation. The program’s effects are measured by comparing the change in one group or set of groups with the change in another group or set of groups.
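As a worked illustration of comparing changes between groups, the sketch below subtracts the change in mean score for a control group from the change for an intervention group. The scores are invented for the example. A real evaluation would also need a statistical test to judge whether the difference could be due to chance.

```python
from statistics import fmean


def program_effect(interv_pre, interv_post, ctrl_pre, ctrl_post):
    """Change in the intervention group minus change in the control group."""
    interv_change = fmean(interv_post) - fmean(interv_pre)
    ctrl_change = fmean(ctrl_post) - fmean(ctrl_pre)
    return interv_change - ctrl_change


# Invented knowledge-test scores (percent correct) before and after the program.
intervention_before = [50, 60, 70]
intervention_after = [70, 80, 90]
control_before = [55, 65, 75]
control_after = [60, 70, 80]

effect = program_effect(intervention_before, intervention_after,
                        control_before, control_after)
print(f"Estimated program effect: {effect:+.1f} points")
```

Here the intervention group improved by 20 points and the control group by 5. The control group's change stands in for history and maturation, so about 15 points of the improvement is attributed to the program.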

Focus group: A qualitative method of evaluating program materials, plans, and results. A facilitator moderates a discussion among four to eight people, allowing them to talk freely on the subject of interest.


Formative evaluation: Research conducted (usually while the program is being developed) on a program’s proposed materials, procedures, and methods.

History: The knowledge, skills, or other attributes that people have with regard to the goals of an injury-prevention program before the program begins.

Impact evaluation: Research to determine how well a program is meeting its intermediate goals of changing people’s knowledge, attitudes, and beliefs.

Instrument: The tool used to gather information on people’s knowledge, attitudes, beliefs, or behavior (e.g., a questionnaire).

Intervention: The method, device, or process used to prevent an undesirable outcome.

Intervention group: The group in an experimental study or evaluation that is to receive the intervention. See also Control group.

Item: One question or statement on an instrument used to measure knowledge, attitudes, beliefs, or behaviors.

Maturation: The knowledge, skills, or other attributes that people gain with regard to the goals of an injury-prevention program while the program is going on but that are not due to program activities.

Morbidity: Any deviation from a state of well-being, either physiological or psychological; any mental or physical illness or injury.

Open-ended questions: Questions that allow respondents to answer freely in their own words. (Compare Closed-ended questions.)

Outcome evaluation: Research to determine how well programs succeeded in achieving their ultimate objective of reducing morbidity and mortality.

Pilot test: A small-scale trial conducted before a full-scale program begins to see if the planned methods, procedures, activities, and materials will work.

Placebo: A service, activity, or item that is similar to the intervention service, activity, or item but without the intervention characteristic that is being evaluated.

Prevalence: The amount of a factor of interest (e.g., knowledge or head injury) that is present in a specified population at a specified time.


Probe: A method of soliciting more information about an issue than respondents gave in their first response to a question.

Process evaluation: Research to determine how well a program is operating. It includes assessments of whether the program and its materials are reaching the target population and, if so, in what quantity.

Qualitative methods: Ways of collecting descriptive data on the knowledge, attitudes, beliefs, and behaviors of the target population. In general, information gathered using qualitative methods is not given a numerical value.

Quality assurance: A system to ensure that all aspects of a program will be of the highest possible caliber.

Quantitative methods: Ways of collecting numerical data on the target population. Quantitative data are used to draw conclusions about the target population.

Quasi-experimental design: In evaluation, methods that do not involve randomly assigning members of the target population either to an intervention or to a comparison group but that, nevertheless, reduce the effects of history and maturation. Evaluators have less control over factors that affect the comparison group than they do with experimental designs.

Randomization: Assigning individuals by chance (using a predetermined method) to groups that will either receive the injury-prevention intervention or not receive it. It is used in programs with an experimental design. The predetermined method is usually based on a table of random numbers or a computer-generated list.
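A computer-generated list of the kind described above can be produced with a seeded pseudorandom number generator, so the assignment can be reproduced and checked later. This Python sketch assumes units are identified by hypothetical household IDs and splits them as evenly as possible between two groups. Python's `random` module is adequate for this kind of assignment but is not meant for security purposes.

```python
import random


def randomize(unit_ids, seed):
    """Assign each unit to 'intervention' or 'control' by chance.

    Shuffles a copy of the list with a seeded generator, then puts the first
    half in the intervention group; with an odd count, control gets one extra.
    """
    rng = random.Random(seed)          # record the seed so the list can be regenerated
    shuffled = list(unit_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {unit: ("intervention" if i < half else "control")
            for i, unit in enumerate(shuffled)}


households = [f"H{n:02d}" for n in range(1, 11)]   # hypothetical IDs H01..H10
assignment = randomize(households, seed=20240501)
for unit in households:
    print(unit, assignment[unit])
```

Fixing the assignment before the program starts, and keeping the seed with the evaluation records, is what makes the method "predetermined."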

Rate: A measurement of how frequently an event occurs among people in a certain population at a point in time or during a specified period of time.
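The arithmetic behind prevalence and rate can be sketched as follows. The figures are hypothetical. The rate function approximates person-time as population multiplied by years, which assumes the population stayed roughly constant during the period.

```python
def prevalence(with_factor, population):
    """Proportion of a population that has the factor at a specified time."""
    return with_factor / population


def rate_per(events, population, years=1.0, per=100_000):
    """Events per `per` people per year over a specified period.

    Assumes person-time is approximately population * years.
    """
    return events * per / (population * years)


# Hypothetical: 450 of 1,500 surveyed parents have the knowledge of interest.
print(f"Prevalence: {prevalence(450, 1_500):.0%}")
# Hypothetical: 18 injury deaths over 2 years in a county of 60,000 people.
print(f"Rate: {rate_per(18, 60_000, years=2):.1f} deaths per 100,000 per year")
```

Expressing rates per 100,000 people per year makes it possible to compare populations of different sizes and periods of different lengths.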

Reach: The number of people or households who receive the program’s message or intervention.

Readability: The level of reading skill required to understand written materials.

Sample: A subset of people in a particular population.

Sampling frame: A complete list of all people or households in the target population.
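Drawing a simple random sample from a sampling frame is one common way to select a sample. The sketch below assumes the frame is a Python list of hypothetical household IDs. It uses `random.Random.sample`, which selects without replacement, so no household is chosen twice.

```python
import random


def simple_random_sample(frame, n, seed=None):
    """Select n distinct units from the sampling frame, each equally likely."""
    if n > len(frame):
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)
    return rng.sample(frame, n)


frame = [f"HH-{n:04d}" for n in range(1, 501)]   # hypothetical list of 500 households
sample = simple_random_sample(frame, 25, seed=7)
print(len(sample), "households selected, for example:", sample[:3])
```

A sample drawn this way can only be as complete as the frame itself. Households missing from the list have no chance of being selected.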

Schematic: The order (in symbols) in which events occur during an experimental or quasi-experimental study.


Survey: A quantitative (nonexperimental) method of collecting information at one point in time on the target population. Surveys may be conducted by interview (in person or by telephone) or by questionnaire.

Survey instrument: See Instrument.

Survey item: See Item.

Target population: The people or households the program intends to serve.

Unit: One person or household in the target population.

COMMENT FORM

1. Which sections of this book did you read? [Check all answers that apply.]
[ ] Introduction
[ ] How This Primer Is Organized
[ ] Section 1: General Information
[ ] Section 2: Stages of Evaluation
[ ] Section 3: Methods of Evaluation
[ ] Appendix A: Examples of Questions to Ask, Events to Observe, and Who or What to Count
[ ] Appendix B: Sample Forms
[ ] Appendix C: Checklist of Tasks
[ ] Appendix D: Bibliography
[ ] Appendix E: Glossary

2. What is your opinion of this book?
[ ] Useful    [ ] Somewhat Useful    [ ] Not Useful

What is your opinion of each section of this book?
Introduction: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
How This Primer Is Organized: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Section 1: General Information: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Section 2: Stages of Evaluation: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Section 3: Methods of Evaluation: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Appendix A: Examples of Questions to Ask, Events to Observe, and Who or What to Count: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Appendix B: Sample Forms: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Appendix C: Checklist of Tasks: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Appendix D: Bibliography: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read
Appendix E: Glossary: [ ] Useful    [ ] Somewhat Useful    [ ] Not Useful    [ ] Did Not Read

3. How did you use this book? [Check all answers that apply.]
[ ] Personal reference
[ ] Staff instruction
[ ] Library resource
[ ] Other (Specify) _________

4. Check your job category.
[ ] Health Professional
[ ] Executive/Manager
[ ] Educator
[ ] Other Professional
[ ] Other (Specify) _____________________

5. Check your employer’s category.
[ ] Government
[ ] Academia
[ ] Nonprofit Organization
[ ] Private Corporation
[ ] Other (Specify) _______________

6. Do you have suggestions on how to improve this book?

-------------------------------------------- Fold --------------------------------------------

No postage necessary - Fold along dotted lines - Seal with tape

----------------------------------------------------------------------------------------------

DEPARTMENT OF HEALTH AND HUMAN SERVICES
Public Health Service
Centers for Disease Control and Prevention (CDC)
Atlanta, Georgia 30333

Official Business
Penalty for Private Use, $300
Return After Five Days
Return Service Requested

NO POSTAGE NECESSARY IF MAILED IN THE UNITED STATES

BUSINESS REPLY MAIL
FIRST CLASS MAIL    PERMIT NO. 99110    ATLANTA, GA 30333
Postage Will Be Paid by Department of Health and Human Services

To: Demonstrating Your Program’s Worth
NCIPC - Division of Unintentional Injury (MS-K63)
4770 Buford Highway NE
Atlanta, GA 30341-3724
