Author's personal copy

Computers and Electronics in Agriculture 69 (2009) 12–18

Contents lists available at ScienceDirect

Computers and Electronics in Agriculture

journal homepage: www.elsevier.com/locate/compag

Hardware-based image processing for high-speed inspection of grains

Tom Pearson ∗

USDA-ARS-GMPRC, 1515 College Ave, Manhattan, KS 66502, United States

Article info

Article history:

Received 3 February 2009

Received in revised form 10 June 2009

Accepted 12 June 2009

Keywords:

FPGA

CMOS

Popcorn

Wheat

Corn

Smart camera

Machine vision

Abstract

A high-speed, low-cost, image-based sorting device was developed to detect and separate grains

having slight color differences or small defects. The device directly combines a complementary

metal–oxide–semiconductor (CMOS) color image sensor with a field-programmable gate array (FPGA)

which was programmed to execute image processing in real-time, without the need of an external computer. Spatial resolution of the imaging system is approximately 16 pixels/mm. The system includes three

image sensor/FPGA combinations placed around the perimeter of a single-file stream of kernels, so that

most of the surface of each kernel is inspected. A vibratory feeder feeds kernels onto an inclined chute

that kernels slide down in a single-file manner. Kernels are imaged immediately after dropping off the end

of the chute and are diverted by activating an air valve. The system has a throughput rate of approximately

75 kernels/s per channel, which is much higher than previously developed image inspection systems. This

throughput rate corresponds to an inspection rate of approximately 8 kg/h of wheat and 40 kg/h of popcorn. The system was initially developed to separate white wheat from red wheat, and to remove popcorn

having blue-eye damage, which is indicated by a small blue discoloration in the germ of a popcorn kernel.

Testing of the system resulted in accuracies of 88% for red wheat and 91% for white wheat. For popcorn,

the system achieved 74% accuracy when removing popcorn with blue-eye damage and 91% accuracy at

recognizing good popcorn. The sorter should find uses for removing other defects found in grain, such

as insect-damaged grain, scab-damaged wheat, and bunted wheat. Parts for the system cost less than

$2000, suggesting that it may be economical to run several systems in parallel to keep up with processing

plant rates.

Published by Elsevier B.V.

1. Introduction

Field-programmable gate arrays (FPGAs) are semiconductor

devices composed of interconnected logic elements, memory, and digital signal processing hardware on a single chip. The

configuration of the interconnections, and therefore the function

of the device, is determined by compiled programs loaded onto the

chip. These devices do not need any operating systems to function

and can handle data throughputs over 200 MB/s, which is at least

an order of magnitude higher than what can be achieved by microcontrollers. FPGAs are currently used in a large variety of hardware

where low cost and high data throughput rates are required, such

as digital cameras, cell phones, speech recognition, and image processing (Maxfield, 2004). Many traditional image frame-grabber

boards use FPGAs to pre-process incoming images and direct the

data to other hardware for further processing and storage. Advantages of FPGAs over micro-controllers and personal computers for

image processing functions are that they can perform many computations in parallel, and that they execute all commands in hardware,

so they are ideal for real-time systems. Additionally, FPGAs are able

to perform computation on images as they are transferred to the

device, before the complete image has been loaded. In contrast,

processing of images on a PC usually requires the images to be

completely loaded into memory, then read out from memory and

processed. This causes a delay in processing the image which can be

significant for high-speed sorting operations (Pearson et al., 2008).

∗ Tel.: +1 785 776 2729; fax: +1 785 537 5550.

E-mail address: thomas.pearson@ars.usda.gov.

0168-1699/$ – see front matter. Published by Elsevier B.V.

doi:10.1016/j.compag.2009.06.007

Charge-coupled devices (CCD) have traditionally been the most

commonly used image sensors. While these devices produce

high-quality images, they require significant support electronics

(Yamada, 2006). However, this need for elaborate support electronics has recently been alleviated by the now-widespread production

of complementary metal–oxide–semiconductor (CMOS) image

sensors (Takayanagi, 2006). CMOS sensors are less expensive than

equivalent CCD sensors, and the required support electronics are

integrated onto the sensor chip. The integration of amplifiers,

analog-to-digital converters, and signal processing capabilities onto

the CMOS image sensors drastically simplifies camera design and

further lowers cost. This makes interfacing CMOS chips with

user-programmable processing hardware, such as FPGAs, relatively

simple.

A direct CMOS-FPGA link is now widely used in many cellular

phones and digital cameras (Maxfield, 2004). The FPGAs in these

systems are used as “glue logic” between the image sensor, microprocessor, and memory. While FPGAs may perform some digital

image processing such as white balancing or exposure compensation, they do not perform image feature extraction or classification.

“Smart Camera” is an industry term for a camera that has an image

sensor and processor that are directly linked, and that has capability for an end user to do some programming of the processor.

These devices are gaining acceptance for automated inspection of

machine parts and self-guided robots. However, currently these

cameras are still very expensive, use digital signal processors (DSPs) rather

than FPGAs, and an end user usually has limited ability to modify the imaging software. Thus, these devices have not yet found

uses for the inspection of agricultural products since agricultural

applications typically deal with irregular product sizes and specific

separation requirements. FPGAs have gained computational power

by integrating more logic elements, fast on-chip memory, and digital signal processing capabilities such as hardware to compute

convolutions. These enhancements make FPGAs more cost effective than DSPs for some signal processing applications (Maxfield,

2004). With the price of CMOS imaging chips and FPGAs already

being very low, and with the simplicity of linking these two technologies together, it is possible to assemble a CMOS image sensor

and FPGA together to make a “smart camera” for less than $100,

with a user having full access to program the camera, extract image

features, and make classifications for sorting operations.

Most commercial high-speed sorting machines used for agricultural products either have no spatial resolution or do not fully

utilize the spatial resolution produced by their sensors. For those

sorters with some spatial resolution, the only image processing performed is thresholding and pixel counting. These sorters are useful

for detecting larger, high contrast, spots on products. Sorters having no spatial resolution may have multiple sensors coupled by

beam splitters to measure light at two or three specific spectral

bands. These types of sorters are effective at distinguishing products with large color or chemical constituent differences by using

near infrared bands. Some newer sorters might have two or more

sensor arrays coupled with beam splitters to get very low spatial

resolution images at two or three spectral bands. However, for many

products, certain defects are difficult to detect and remove using the

most advanced, currently available, commercial sorters. Products

with slight color differences or small, low contrast spots or blemishes are difficult or impossible to sort with commercial sorters.

Shriveled and Fusarium Head Blight (scab) damaged wheat kernels

are a case in point. The efficacy of using a limited spatial resolution (∼0.5 mm) commercial dual-band (one near infrared (NIR), one

visible) sorter for removal of scab-damaged kernels has been studied (Delwiche et al., 2005). Only 50% of the scab-damaged kernels

were removed, while about 5% of the undamaged kernels were also

rejected. Preliminary studies have shown that the use of simple

histograms of low resolution color images enables scab-damaged

kernels to be distinguished from sound kernels with over 90% accuracy (Pearson, 2006). This is an application that an FPGA linked to

an image sensor could perform very economically.

There have been many developments relevant to the inspection

of agricultural products using imaging, such as the inspection of

apples (Bennedsen and Peterson, 2004), rice (Kumar and Bal, 2007),

and wheat (Wang et al., 2004). However, few of these developments

are able to be implemented as high-speed sorting applications at

an economically feasible cost.

Removal of popcorn with blue-eye damage and separation of red

and white wheat classes are two sorting applications that commercial sorters cannot perform adequately, and for which current

imaging technology is too slow and expensive for viable implementation. Blue-eye damage in popcorn is a recurring problem due

to delayed drying of the kernels after harvest. Since popcorn quality

is very sensitive to moisture, the corn cannot be dried as quickly as

field corn. However, this can cause infection by fungi that lead to

blue-eye damage, which appears as a small, dark blue spot on the

germ. The problem is important to the popcorn industry as these

kernels can have a poor flavor when popped. The visual damage to

the kernels is small and therefore not detectable by any commercially available sorting equipment. Automated separation of red and

white wheat is needed by wheat breeders developing white wheat

varieties that have the baking properties of red wheat. Upon harvest

of field plots with both red and white wheat, the white wheat needs

to be separated from red kernels so that it can be propagated again.

Commercial color sorters can distinguish red and white wheat with

approximately 80% accuracy after several passes through the sorter

(Pasikatan and Dowell, 2003), which may not always be accurate

enough for some breeding lines with small amounts of white wheat.

Additionally, the cost of these sorters is high and they are designed

to handle large volumes rather than the smaller samples of 1 kg or

less that breeders generally work with.

The purpose of this research was to directly link a low-cost CMOS

image sensor with a low-cost FPGA, and to program the FPGA to

extract simple features and classify objects in the image. The test

objects were popcorn with and without blue-eye damage and red

and white wheat classes.

2. Materials and methods

2.1. Image sensor—FPGA design

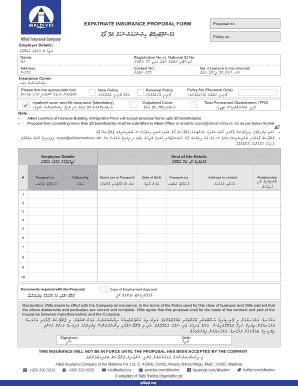

A CMOS image sensor (KAC-9628, Eastman Kodak Company,

Rochester, NY) was mounted onto a custom-designed printed

circuit board with all support electronics recommended by the

manufacturer. Fig. 1 displays a schematic of the image sensor,

support electronics, and interface to the FPGA. The FPGA, with

its necessary support electronics, was purchased pre-mounted

onto a circuit board of its own (Pluto-II, KNJN-LLC-fpga4fun.com,

Freemont, CA). The FPGA board has an electrically erasable programmable read-only memory (EEPROM), a type of

non-volatile memory, that stores and loads the program the FPGA

is to run upon powering up. In addition, the board also has a serial

communications port for loading new programs and transferring

data, a 25 MHz clock, an LED, a 3.3 V voltage regulator for powering

the FPGA and connected electronics, and a power socket to supply external power. The FPGA used on the Pluto-II board is made

by Altera Corporation (Model #Cyclone EP1C3) and the free Altera

Quartus II web edition version 7.2 was used to develop and compile

programs for the FPGA. The FPGA board was connected directly to

the image sensor board via header pins. Fig. 2 displays images of

the image board and attached FPGA board.

The circuit board built for the image sensor and interface for the

FPGA board was a four-layer board to simplify grounding and power

distribution and to help shield electrical noise. A 470 µF electrolytic

capacitor was used on the image board to stabilize the power from

low-frequency variation while several 0.1 µF capacitors were used

on the image board near the image sensor to dampen higher frequency power fluctuations.

The FPGA board was also connected to a rotary hex DIP switch

(94HAB16RAT, Grayhill, Inc., LaGrange, IL) which sends a number

between 0 and 15 to the FPGA that adjusts a sensitivity threshold for classification (discussed later). For diverting grain, the FPGA

outputs a digital signal which triggers a solid state relay (D0061B,

Crydom, San Diego, CA) and fires an electronic solenoid-activated

air valve (36A–AAA–JDBA–1BA, Mac Valves, Inc., Wixom, MI). The

air valve sends a burst of air for 5 ms to a wide air nozzle (31875K41,

McMaster-Carr Co., Elmhurst, IL) to ensure proper rejection.

Fig. 1. Schematic of image sensor—FPGA interface with supporting electronics for

the image sensor. Not shown are the EEPROM, serial interface, 25 MHz clock, voltage

regulator, and support electronics for the FPGA which are supplied on the Pluto-II

board.

All non-default image sensor settings were sent from the FPGA

to the image sensor using an inter-integrated-circuit (I²C) serial bus

communications protocol (Phillips Semiconductor, 2000) as specified by the image sensor manufacturer. The default image sensor

settings that were changed by the FPGA were the pixel clock division (from four to two), the region of interest, and the analog gain.

The 25 MHz clock on the FPGA circuit board was wired to the main

clock input of the image sensor, so the pixel clock rate was 12.5 MHz.

The region of interest was limited to the two lines in the center

of the image sensor. This essentially made the two-dimensional

sensor work as a color linescan sensor. The image sensor used has

either a red, green or blue color filter over every pixel arranged in a

Bayer pattern, typical of most two-dimensional image sensors. One

line consists of red and green pixels and the next line consists of

green and blue pixels. Most two-dimensional color images are constructed by interpolating the colored pixels so that each pixel would

appear to have a red, green, and blue value. However, this was not

done in this application in order to reduce computations. Thus, the

red and blue image data was one quarter the full scale pixel resolution and the green image data was one half the full scale raw pixel

resolution. The analog image gain was set to a level of 128, which is

the middle of the amplification range on this sensor. The 12.5 MHz

clock rate produced images of popcorn and wheat kernels of the

correct aspect ratio when the imaged lines were reconstructed to

form a two-dimensional image.
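As a rough illustration of the non-interpolated color handling described above, the following Python sketch splits one red-green line and one green-blue line into separate color planes. The function name and the RGGB column ordering (red at even columns of the first line, blue at odd columns of the second) are assumptions made for illustration; the actual pixel ordering on the sensor and the FPGA logic are not specified here.

```python
def split_bayer_line_pair(rg_line, gb_line):
    """Split a red-green line and a green-blue line into color planes.

    Assumes an RGGB layout: red at even columns of the first line and
    blue at odd columns of the second line. No interpolation is done,
    so each plane keeps only the pixels that carry that color filter.
    """
    red = rg_line[0::2]
    green = rg_line[1::2] + gb_line[0::2]
    blue = gb_line[1::2]
    return red, green, blue


if __name__ == "__main__":
    # Two 640-pixel lines of dummy data (1280 raw pixels in total).
    rg = [10, 20] * 320
    gb = [30, 40] * 320
    red, green, blue = split_bayer_line_pair(rg, gb)
    print(len(red), len(green), len(blue))
```

Of the 1280 raw pixels in the two lines, 320 are red, 640 are green, and 320 are blue, which corresponds to the one-quarter and one-half resolutions stated above.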

The image sensor continuously sent pixel data to the FPGA.

Image acquisition was initiated at the first pixel of the red-green

image sensor line after any pixel data above an intensity of 15 was

detected by the FPGA. Self-triggering of image acquisition simplifies the design and is made possible by running the image sensor in

linescan mode with direct connection to the FPGA. After triggering,

the FPGA would acquire 125 complete lines, which resulted in an

image 640 pixels wide by 125 pixels tall. Thus, image acquisition

time was approximately 6.4 ms. Both popcorn and wheat kernels

would range from approximately 100 to 120 lines tall in the resulting images. Images were not color interpolated by the FPGA, in order

to reduce complexity of the FPGA program. The red, green and blue

pixels were simply processed separately. Images from the image

sensor were also captured by a logic analyzer (LAP-16128U, Zeroplus, Chung Ho City, Taiwan) and stored on a personal computer

for inspection of lighting and focus, and for off-line development of

image processing and classification schemes.
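The acquisition time quoted above follows from the pixel clock and the image size. The sketch below reproduces that arithmetic and gives one possible reading of the self-triggering rule. It assumes no blanking intervals between lines and that red-green lines fall on even line indices; these assumptions, and all names in the code, are illustrative rather than taken from the FPGA program.

```python
MAIN_CLOCK_HZ = 25_000_000   # clock on the FPGA board
PIXEL_CLOCK_DIVISION = 2     # changed from the default of four
LINE_WIDTH = 640             # pixels per line
LINES_PER_IMAGE = 125        # lines acquired after triggering
TRIGGER_LEVEL = 15           # intensity that starts acquisition


def acquisition_time_s(lines=LINES_PER_IMAGE, width=LINE_WIDTH):
    """Time to clock out one image, ignoring any blanking (assumption)."""
    pixel_clock_hz = MAIN_CLOCK_HZ / PIXEL_CLOCK_DIVISION
    return lines * width / pixel_clock_hz


def first_acquired_line(lines, level=TRIGGER_LEVEL):
    """Index of the first red-green line after a pixel exceeds `level`.

    Red-green lines are assumed to sit at even indices. Returns None
    when no pixel in the stream exceeds the trigger level.
    """
    for i, line in enumerate(lines):
        if any(p > level for p in line):
            start = i + 1
            return start if start % 2 == 0 else start + 1
    return None


if __name__ == "__main__":
    print(f"{acquisition_time_s() * 1e3:.1f} ms")
```

With a 12.5 MHz pixel clock, 125 lines of 640 pixels take 80,000 clock periods, or 6.4 ms, in agreement with the figure given in the text.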

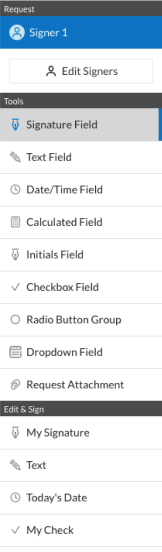

Three FPGA/image sensor cameras were mounted to a steel circular ring 30.5 cm in diameter. The cameras were placed 120° apart

on the ring so that almost the entire surface of kernels could be

inspected as they dropped off the end of the chute. Preliminary

experiments showed that a small spot on a popcorn kernel made

by an ink pen was visible approximately 99% of the time. Conversely, when only two cameras were used in an opposed manner,

the spot was visible in only 70% of the images. Thus, a three camera system was used as shown in Fig. 3 to ensure that the system

would be able to detect small spots or blemishes, such as blue-eye

damaged popcorn. Illumination for each camera came from two

narrow-spot-beam 20 W halogen reflector bulbs (#1003116, Ushio

America, Cypress, CA) for a total of six bulbs for the entire system. The bulbs were placed such that they did not point directly

at cameras on the opposite side of the ring. The lenses used on

each camera were miniature type with 12 mm × 0.5 mm thread and

16 mm focal length, f1.8 (V-4316-2.0, Marshall Electronics, Inc., El

Segundo, CA). Lenses were mounted to the image sensor circuit

board with a threaded lens mount (LH-4, Marshall Electronics, Inc.,

El Segundo, CA). Aluminum tubes, 4 cm long, were press fit around

each lens to block stray light from adjacent bulbs that would otherwise affect the images. The feeder chute was aligned in the center

of the ring so that all cameras had similar spatial resolution. Fig. 3

displays an end view of the complete sorting system.

Fig. 2. Photo of the image sensor and FPGA boards connected together. The image sensor and lens are on the opposite side from the FPGA board.

Fig. 3. End view of the sorter sensing system showing all three cameras, six light

bulbs, air nozzle, and chute.

2.2. Signal processing and classification—popcorn

Fig. 4 displays images of a popcorn kernel with blue-eye damage

as well as an undamaged kernel (these are high-resolution images,

not taken by the image sensor/FPGA camera used for sorting). Below

each image is a gray image displaying only the red pixels. It can

be seen that the blue-eye damage (darker spot on the germ) has

slightly better contrast in the mono-chrome red pixel image than

in the color image. Furthermore, the brightness of the endosperm is

closer to that of the germ in the red pixel image. Below the images of

the red pixels, an intensity profile plot across each kernel is shown.

Blue-eye damage is approximately 15 pixels wide by 40 pixels long

in images taken by cameras used on the sorting machine. The chute

used to feed the kernels had a round bottom groove approximately

9.0 mm wide so that kernels would usually be oriented so that their

long axis was parallel with their direction of travel. This orientation

caused the blue-eye damage to appear from top to bottom in the

images. Blue-eye cannot be accurately identified by simple image

thresholding, as the spot is very small and dark areas on the sides of

the kernels have similar intensities to blue-eye areas. Profile plots

across blue-eye damaged portions of a kernel appear as a deep valley having steep slopes from a high intensity level of the white germ

to low intensity levels of the darker blue-eye damaged area.

Upon receipt of new red pixel data, the FPGA was programmed

to place it into a memory buffer of 32 pixels in length. Pixel intensity

slopes were computed with a six pixel gap. Blue-eye damage was

detected when a set of pixels had a sharp drop in intensity, followed

by at least three pixels having an intensity below a threshold level,

then a sharp rise in pixel intensity, all happening within 24 pixels

in one line. Specifically, a set of 24 pixels were considered to be part

of blue-eye damage when the following conditions were met:

1. A drop in red pixel intensity exceeding 15 intensity levels across

a width of six pixels. This must occur in the first 10 pixels of the

24 pixel set.

2. At least three pixels between pixels 10 and 14 of the 24 pixel set

must have an intensity level less than a preset threshold level.

3. A rise in red pixel intensity of at least 15 intensity levels across

the width of six pixels occurring in the last 10 pixels of the 24

pixel set.

The rotary DIP switch was used to adjust the pixel intensity

threshold level between values of 60 and 90 in steps of 2 intensity levels. This was needed to adjust for slightly different lighting

for each of the three cameras. In each image, the FPGA counted

each occurrence of the blue-eye conditions outlined above, with

only one count allowed per image line to avoid double-counting of

the same blemish on any given line. Blue-eye damage would usually have 10 or more occurrences while good kernels would usually

have 0 occurrences. The air valve was activated if more than ten

occurrences of the blue-eye damage criteria were met.
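The blue-eye criteria and the per-image counting can be sketched in Python as follows. This is an illustrative reading, not the FPGA program: it assumes 0-based pixel indices, treats "pixels 10 and 14" as an inclusive range, requires both ends of the six-pixel gap to lie inside the first (or last) 10 pixels, and assumes the rotary switch maps linearly onto the 60 to 90 threshold range. All function names are hypothetical.

```python
WINDOW = 24      # pixels examined together
GAP = 6          # pixel gap used for the slope
MIN_STEP = 15    # intensity change required for a drop or rise


def dip_to_threshold(position):
    """Map rotary switch position 0..15 to a threshold of 60..90 (step 2)."""
    if not 0 <= position <= 15:
        raise ValueError("switch position must be between 0 and 15")
    return 60 + 2 * position


def window_is_blue_eye(window, threshold):
    """Apply the drop, dark-run, and rise conditions to one 24-pixel set."""
    if len(window) != WINDOW:
        raise ValueError("window must hold exactly 24 pixels")
    # Condition 1: drop exceeding 15 levels over six pixels, first 10 pixels.
    drop = any(window[i] - window[i + GAP] > MIN_STEP
               for i in range(0, 10 - GAP))
    # Condition 2: at least three of pixels 10..14 below the threshold.
    dark = sum(1 for p in window[10:15] if p < threshold) >= 3
    # Condition 3: rise of at least 15 levels over six pixels, last 10 pixels.
    rise = any(window[i + GAP] - window[i] >= MIN_STEP
               for i in range(WINDOW - 10, WINDOW - GAP))
    return drop and dark and rise


def line_has_blue_eye(red_line, threshold):
    """True if any 24-pixel set in the line meets all three conditions."""
    return any(window_is_blue_eye(red_line[s:s + WINDOW], threshold)
               for s in range(len(red_line) - WINDOW + 1))


def kernel_is_blue_eye(red_lines, threshold, min_count=10):
    """Count at most one occurrence per line; reject above `min_count`."""
    count = sum(1 for line in red_lines if line_has_blue_eye(line, threshold))
    return count > min_count


if __name__ == "__main__":
    valley = [200] * 4 + [150, 100] + [50] * 12 + [100, 150] + [200] * 4
    line = [200] * 100 + valley + [200] * 100
    print(kernel_is_blue_eye([line] * 11, dip_to_threshold(5)))
```

Counting each line at most once mirrors the rule that prevents the same blemish from being counted twice on a given line, and the strict "more than ten" comparison follows the air valve criterion stated above.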

All of the image processing for each pixel was performed before

the next pixel data arrived, so there was no further processing, or

delay, after the complete image was acquired. Once the image was

acquired, the FPGA would be ready to start processing a new image

as soon as the next kernel came into the field of view of the camera.

While the FPGA also controlled the opening and closing of the air

valve, this was done in parallel with image acquisition to have the

highest kernel throughput possible.

2.3. Signal processing—red and white wheat

Pearson et al. (2008) showed that the standard deviation of the

red pixels and the number of blue pixels below a set threshold are

two good features for distinguishing red wheat from white wheat

when using color images. Red wheat tends to have higher standard deviations of pixel intensities, as the kernels tend to have darker areas

accompanied by lighter, almost white, areas at the beard end. Also,

weathering tends to create light areas on red wheat kernels. The

combination of darker red and lighter white areas drives the pixel

intensity standard deviation higher than more consistently colored

white kernels. Red wheat also has higher counts of blue pixels with

dark intensity levels. This is due to the red kernel pigment absorbing blue light. In contrast, white kernels have lower counts of low

intensity blue pixels, since the white pigment reflects high amounts

of blue light. The FPGA was programmed to compute these two features and classify kernels based on them. The variance of the red

pixels was computed by keeping a running tally of the sum and

sum of squares of the red pixel intensities above a threshold level

of 15, which segmented the kernel from the background. The low

threshold level for counting blue pixels was also 15, but the upper

threshold limit was set by the rotary DIP switch, which ranged from

60 to 121 in steps of 4 intensity levels. By trial and error, kernels

were classified as red if the variance was greater than 2500 and the

count of blue pixels with low intensities was greater than 50. The

sorter was calibrated by adjusting the rotary DIP switch until an

accurate sort was achieved.
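The two wheat features lend themselves to streaming computation, since the variance can be recovered from a running sum and sum of squares. The Python sketch below is a minimal illustration under stated assumptions: the class and method names are hypothetical, the blue count uses the low-intensity blue pixel feature defined at the start of this section, and the upper blue threshold is passed in directly rather than derived from the switch position.

```python
class WheatFeatures:
    """Running features for one kernel image (illustrative sketch)."""

    def __init__(self, blue_upper, background=15):
        self.blue_upper = blue_upper    # upper limit set by the DIP switch
        self.background = background    # separates kernel from background
        self.n = 0                      # red kernel pixels seen so far
        self.total = 0                  # running sum of red intensities
        self.total_sq = 0               # running sum of squared intensities
        self.dark_blue = 0              # blue pixels between the two limits

    def add_red(self, pixel):
        if pixel > self.background:
            self.n += 1
            self.total += pixel
            self.total_sq += pixel * pixel

    def add_blue(self, pixel):
        if self.background < pixel < self.blue_upper:
            self.dark_blue += 1

    def red_variance(self):
        """Population variance from the running tallies: E[x^2] - E[x]^2."""
        if self.n == 0:
            return 0.0
        mean = self.total / self.n
        return self.total_sq / self.n - mean * mean

    def is_red_wheat(self, variance_limit=2500, count_limit=50):
        return (self.red_variance() > variance_limit
                and self.dark_blue > count_limit)


if __name__ == "__main__":
    features = WheatFeatures(blue_upper=80)
    for p in [20] * 100 + [220] * 100:
        features.add_red(p)
    for p in [30] * 60:
        features.add_blue(p)
    print(features.red_variance(), features.is_red_wheat())
```

Because only three accumulators and one counter are updated per pixel, no image needs to be stored, which is consistent with processing each pixel before the next one arrives.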

2.4. Sample source and sorter testing

Popcorn samples were supplied by a major popcorn processor,

and were pulled from two storage bins known to have high incidences of blue-eye damage. One fifty-pound sack was pulled from

each bin. Subsamples from these sacks were inspected by hand

and 100 blue-eye damaged kernels and 1000 good kernels were

removed for sorter testing. After the FPGAs were programmed, the

good kernels were fed through the sorter with a lens cap over two

of the cameras. The rotary DIP switch on the camera without the

lens cap was adjusted until very few good kernels were rejected.

The procedure was then applied to the other cameras. This was

done to account for slightly different lighting conditions for each

camera. After setting the DIP switches, the 100 blue-eye damaged

kernels and 1000 good kernels were mixed and run through the

system at a rate of approximately 75 per second. The fractions of

blue-eye and good kernels in the reject and accept streams were

then counted.

Fig. 4. Images of blue-eye damaged (left) and good (right) popcorn kernels. The top images are normal color images while the lower images are of the red pixels only. Note

that the blue-eye damage in the germ area has somewhat better contrast from the germ and kernel in the red pixel image than the color image. The blue-eye damage is

highlighted in the pixel intensity profile plots (bottom), causing a deep “valley” in the plot.

The red and white wheat were of the Jagger and Blanca Grande

varieties, respectively. A sample of the red wheat was run through

the sorter and the rotary DIP switches were adjusted to minimize rejection of red wheat. This was performed one camera at

a time using the same procedure as with the popcorn. Next, a

200 g mixture of 90% red and 10% white wheat was run through

the sorter at approximately 75 kernels/s. The red and

white kernels in each of the accept and reject streams were then

weighed.

3. Results and discussion

3.1. Popcorn

The sorter was able to separate 74% of the popcorn with blue-eye

damage while also rejecting, erroneously, 9% of the good popcorn.

Thus, it was 91% accurate on the good popcorn. The 9% false positive rate is acceptable since blue-eye damage usually occurs in a low

percentage of storage bins every year. Therefore, only 9% of a small

fraction of the entire popcorn stored would be downgraded into

the lower valued blue-eye class. Inspection of the good kernels that

were rejected by the sorter revealed that approximately 20% (1.8%

of the total good kernels) had at least one small dark spot elsewhere

on the kernel not associated with blue-eye. It may be desirable for a

popcorn processor to remove these kernels in order for their product to have an appealing appearance. Since it takes 6.4 ms to acquire

an image, process it and classify it, the sorter should have a theoretical throughput of 156 kernels/s. However, this assumes that the

kernels would be perfectly spaced apart. If two kernels are touching

as they enter the camera field of view, then both may be rejected

if one of them is classified as blue-eye damaged. It was found by

trial and error that these types of errors could be minimized if the

kernel throughput did not exceed 75 kernels/s, approximately half

of the theoretical maximum throughput rate. A throughput rate

of 75 popcorn kernels/s is approximately equivalent to 40 kg/h (or

1.5 bushels/h). At this rate, one sorter could process a 10,000 bushel

bin in approximately 278 days if it runs continuously for 24 h a day.

Since blue-eye damage does not occur in every storage bin, a typical

large popcorn processor may need to sort only two or three storage

bins per year. The cost of all of the parts for one sorting system is

approximately $2000, so having more than one sorter running in

parallel may be a viable option.
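The throughput figures in this section can be checked with a few lines of arithmetic. The Python sketch below uses only the numbers quoted above (6.4 ms per image, 1.5 bushels/h, a 10,000 bushel bin); the function names and the 30-day sorting window in the example are hypothetical and chosen purely for illustration.

```python
import math

ACQUISITION_TIME_S = 0.0064       # time to acquire and classify one image
POPCORN_BUSHELS_PER_HOUR = 1.5    # rate at 75 kernels/s


def theoretical_rate(acquisition_time_s=ACQUISITION_TIME_S):
    """Upper bound on kernels/s if kernels were perfectly spaced."""
    return 1.0 / acquisition_time_s


def days_to_sort(bushels, bushels_per_hour=POPCORN_BUSHELS_PER_HOUR,
                 hours_per_day=24.0):
    """Days one sorter needs to process a bin of the given size."""
    return bushels / bushels_per_hour / hours_per_day


def sorters_needed(bushels, days_available,
                   bushels_per_hour=POPCORN_BUSHELS_PER_HOUR):
    """Number of parallel sorters needed to finish within a time window."""
    return math.ceil(days_to_sort(bushels, bushels_per_hour) / days_available)


if __name__ == "__main__":
    print(round(theoretical_rate(), 2))      # kernels/s
    print(round(days_to_sort(10_000)))       # days for one sorter
    print(sorters_needed(10_000, 30))        # sorters for a 30-day window
```

The first two values reproduce the 156 kernels/s and 278 days quoted in the text. Under the hypothetical 30-day window, about ten sorters running in parallel would be required for one bin.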

3.2. Wheat

The accuracy achieved by the system was 88% for red wheat

and 91% for white wheat. These accuracies are more than 10–20%

above what can be accomplished after passing wheat through a

commercial color sorter several times (Pasikatan and Dowell, 2003).

However, the accuracies are about 5% below what has been accomplished using three similar features extracted from color images

using a traditional camera and personal computer to do the image

processing (Pearson et al., 2008). Classification on that system was

made using a discriminant function. However, the system using the

personal computer has a lower throughput rate of approximately

30 kernels/s, a higher initial cost, and is probably not as physically

robust.

3.3. General discussion

The throughput of 75 kernels/s per channel approximates that

of high-speed commercial color sorters and is substantially higher

than what has been developed so far using traditional cameras connected to personal computers that perform the image processing

(Pearson et al., 2008). Traditional cameras may output images of

similar resolution at rates of 60 frames per second, but inspection

rates are about half (30 kernels/s) due to kernel feeding limitations.

Since the camera is directly linked to an FPGA, no personal computer is needed other than for compiling the FPGA software and

loading it onto the non-volatile memory on the FPGA board. The

FPGA then reads this memory at power up. This should make the

FPGA/image sensor combination more robust and better able to

withstand processing plant environments that can be hot, humid,

and dusty. The FPGA and camera do not require any ventilation for

cooling, so they can be enclosed in a sealed case. It is anticipated

that the rotary DIP switches would need to be adjusted periodically

to account for lighting fluctuations. This is not a time-consuming

process, however. A standard reference sample could be used to

perform this adjustment, and it probably would not need to be

performed more than once per week (Pearson et al., 2008).

This work has demonstrated that use of a color image sensor

directly linked to an FPGA can facilitate sorting accuracies that are

much higher than what can be accomplished with commercial color

sorting machines. Some defects found on grains, such as blue-eye

damage on popcorn, cannot be detected by color sorting machines

but can be detected with reasonable accuracy by use of simple

image processing. Likewise, the process of separating red and white

wheat can be made more accurate than what can be accomplished

by color sorters, by use of both the red and blue spectral bands

and very simple image processing to compute the standard deviation and counts of certain colored pixels within specified intensity

ranges.

While this study demonstrated the use of this imaging hardware,

more work is needed to automatically account for lighting fluctuations, to extract more features to achieve higher sorting accuracies,

to use more elaborate classification techniques, and to make the

system physically more robust. It has been shown that accuracies for separating red and white wheat can be increased to about

96% when three image histogram features are extracted from color

images and discriminant functions are used to perform classifications. While extracting more features can be easily performed on

the FPGA, there is currently no easy way to enter new discriminant

function parameters. This could be overcome by adding the capability to store images to removable flash memory so that they can

be easily transferred to a personal computer, where new discriminant functions can be developed and their parameters loaded back

to the flash memory that the FPGA reads at start-up. Accuracy for

popcorn could likely be improved by more elaborate image processing and by using other colored pixels as well. The FPGA chip

used in this study has approximately 2900 logic elements that can

be programmed. The popcorn and wheat programs used 880 and

950 logic elements, respectively. Thus, the FPGA has the capability

to perform more elaborate computations, especially since minimal

effort was made to make efficient use of available logic elements.

Other FPGAs with many more programmable logic

elements are also available.
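
The discriminant-function step discussed in this paragraph can be illustrated with a minimal Python sketch. It assumes a linear discriminant with one weight vector and one offset per class, applied to three features; the parameter values and the names `PARAMS` and `classify` are hypothetical and are not taken from the study.

```python
# Hypothetical discriminant parameters: one (weights, offset) pair per class.
# In the workflow proposed above, values like these would be developed on a
# personal computer and then stored where the FPGA can read them at start-up.
PARAMS = {
    "red": ((0.10, -0.05, 0.02), -4.0),
    "white": ((0.04, 0.03, 0.01), -3.0),
}


def classify(features, params=PARAMS):
    """Return the class label with the largest linear discriminant score."""
    best_label, best_score = None, float("-inf")
    for label, (weights, offset) in params.items():
        # score = offset + w1*x1 + w2*x2 + w3*x3
        score = offset + sum(w * x for w, x in zip(weights, features))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


if __name__ == "__main__":
    print(classify((100, 20, 10)))  # red
    print(classify((20, 100, 10)))  # white
```

Evaluating each class score takes three multiplications and three additions, so changing the classifier amounts to changing a handful of stored numbers rather than the processing logic itself.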

Grain throughput of the sorting system could be greatly

improved for some products by re-configuration of the cameras

so that only two are used, one above and one below a “waterfall” of grain sliding down several closely spaced parallel chutes.

The images acquired in this study were 640 pixels wide, yet each

popcorn kernel was no more than 90 pixels wide. Thus, five or six

streams of grain could be inspected, lowering the overall cost and

space requirements of the sorters. As discussed earlier, if only a few

simple image features are extracted, the FPGA chip used should

have enough logic elements to process several kernels in parallel.

This technique may work well for kernels having an overall color

difference, such as red and white wheat. However, this configuration

would not inspect the entire perimeter of the kernels, so accuracy

in the detection of small localized defects, such as blue-eye damage,

would suffer. Further research is needed to quantify the trade-off

of higher throughput vs. accuracy, as well as lower cost vs. accuracy. Nevertheless, a sorter in this configuration might find many

other applications such as segregating mixed species of crops, such

as wheat and soybeans, weed seeds, broken/damaged corn kernels,

durum kernels with black-tip, durum vitreousness, and off-color

sorghum due to fungal damage or weathering. These applications

will be a basis for future research.
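
The estimate of five or six parallel streams given earlier in this paragraph can be checked with a short calculation. The sketch below uses the 640-pixel image width and the 90-pixel maximum kernel width from the text; the gap between adjacent streams is an assumed value, since chute spacing is not specified.

```python
def max_streams(image_width_px, kernel_width_px, gap_px):
    """Number of side-by-side kernel streams that fit across one image.

    n streams need n * kernel_width_px + (n - 1) * gap_px pixels.
    gap_px is a hypothetical spacing between adjacent chutes.
    """
    if kernel_width_px <= 0 or gap_px < 0:
        raise ValueError("kernel width must be positive and gap non-negative")
    return (image_width_px + gap_px) // (kernel_width_px + gap_px)


if __name__ == "__main__":
    for gap in (0, 20, 40):
        print(gap, max_streams(640, 90, gap))
    # 0 7
    # 20 6
    # 40 5
```

With no spacing at all, seven kernel widths fit across the image; assumed gaps of 20 to 40 pixels between streams give six or five streams, which is consistent with the figure quoted above.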

4. Conclusion

Simple image processing can be executed in hardware on FPGA

chips directly linked to image sensors. This combination makes

an economical system for the inspection of agricultural products,

which until now has not been reported. While commercial color

sorters can do an excellent job of removing highly discolored product, their accuracy may be inadequate when the defect is very

small or the color difference is slight. This study showed that the

image sensor/FPGA combination can improve sorting accuracy of

these types of defects or color differences, as illustrated with popcorn damaged with blue-eye and separation of red and white wheat.

Since all processing is done in hardware where many image processing steps are performed in parallel, kernel throughput rates are

nearly double what has been accomplished so far using traditional cameras with processing performed on personal computers.

Parts for the system are lower in cost and physically more robust

than systems using personal computers, so they might be more

suitable for processing plant environments.

Acknowledgements

Mention of trade names or commercial products in this publication is solely for the purpose of providing specific information

and does not imply recommendation or endorsement by the U.S.

Department of Agriculture.

References

Bennedsen, B.S., Peterson, D.L., 2004. Identification of apple stem and calyx using

unsupervised feature extraction. Transactions of the ASAE 47 (3), 889–894.

Delwiche, S.R., Pearson, T.C., Brabec, D.L., 2005. High-speed optical sorting of soft

wheat for reduction of deoxynivalenol. Plant Disease 89 (11), 1214–1219.

Kumar, P.A., Bal, S., 2007. Automatic unhulled rice grain crack detection by X-ray

imaging. Transactions of the ASABE 50 (5), 1907–1911.

Maxfield, C.M., 2004. The Design Warrior’s Guide to FPGAs. Newnes Press, Burlington, MA.

Pasikatan, M.C., Dowell, F.E., 2003. Evaluation of a high-speed color sorter for segregation of red and white wheat. Applied Engineering in Agriculture 19 (1),

71–76.

Pearson, T.C., 2006. Low-cost bi-chromatic image sorting device for grains. ASABE

Paper No. 063085. ASABE, St. Joseph, MI.

Pearson, T.C., Brabec, D.L., Haley, S., 2008. Color image based sorter for separating

red and white wheat. Sensing and Instrumentation for Food Quality and Safety

2008 (2), 280–288.

Phillips Semiconductor, 2000. The I2C-Bus Specification. Document # 9398-39340011, Phillips Semiconductor Inc., Amsterdam, the Netherlands.

Takayanagi, I., 2006. CMOS image sensors. In: Image Sensors and Signal Processing

for Digital Still Cameras. Taylor and Francis Group, Boca Raton, FL, pp. 143–178.

Wang, N., Zhang, N., Dowell, F.E., Pearson, T.C., 2004. Determination of durum wheat

vitreousness using transmissive and reflective images. Transactions of the ASAE

48 (1), 219–222.

Yamada, T., 2006. CCD image sensors. In: Image Sensors and Signal Processing for

Digital Still Cameras. Taylor and Francis Group, Boca Raton, FL, pp. 95–142.
