THE NO SUSPECT CASEWORK

DNA BACKLOG REDUCTION PROGRAM

EXECUTIVE SUMMARY

Forensic DNA evidence has tremendous potential to improve the

criminal justice system. Use of DNA evidence has solved numerous criminal

cases that could not have been solved with traditional law enforcement

techniques, and in a number of cases has exonerated persons charged with

or convicted of crimes they did not commit. However, DNA currently is not

being used to its full potential due to several factors, including a significant

backlog of cases awaiting analysis in state and local laboratories and at law

enforcement agencies across the country. A report submitted to Congress

by the Attorney General in April 2004 estimated that over 540,000 criminal

cases with biological evidence were awaiting DNA testing in state and local

laboratories and at law enforcement agencies.[1] Those cases include 52,000

homicides and 169,000 sexual assaults.

To aid in reducing this casework backlog, the Department of Justice,

Office of Justice Programs (OJP), National Institute of Justice (NIJ),

developed and is administering the No Suspect Casework DNA Backlog

Reduction Program (Program), which provides funding to state laboratories.

The purpose of this funding is to help the state laboratories identify, collect,

and analyze DNA samples from evidence collected in cases where no suspect

has been identified or in which the original suspect has been eliminated.

Our audit examined OJP’s Program oversight and administration, Program

participants’ compliance with requirements, and the allowability of costs

charged to the Program.

[1] The report, National Forensic DNA Study Report, was the result of a study conducted by Washington State University and Smith Alling Lane, a Tacoma, Washington, law firm.

Background

The Program’s mission is “to increase the capacity of state laboratories

to process and analyze crime-scene DNA in cases in which there are no

known suspects, either through in-house capacity building or by outsourcing

to accredited private [contractor] laboratories.” The Program was

authorized under the DNA Analysis Backlog Elimination Act of 2000, and was

initiated with a Solicitation for applications to be submitted by

September 2001. Due primarily to the events surrounding September 11,

2001, the Solicitation deadline was extended into FY 2002. Therefore, while

the first year of the Program technically was FY 2001, the first grant

applications were received and reviewed in FY 2002, and the first awards

were issued toward the end of that fiscal year.[2] OJP awarded approximately

$28.5 million in this first year of the Program.

A total of 25 states received awards in the Program’s first year (see

Table 1 for the complete listing of the grantees and award amounts). In

many cases, these grantees teamed with co-grantees in their state (such as

local laboratories or law enforcement agencies that received funding from

the award to the Program grantee).

Program grantees received funding for the analysis of over 24,700

no-suspect DNA cases in the Program’s first year. Each award was to be

used for the processing and DNA analysis of no-suspect cases, defined by

OJP as cases in which there is biological evidence from a crime but for which

no suspect has been identified. Analysis could be conducted

“in-house” by the DNA laboratories within the grantee’s state, by

outsourcing to state or local laboratories outside of the grantee’s state, by

outsourcing to contractor laboratories, or some combination of these

methods. In addition, Program funding could be used to purchase supplies

and equipment and to pay overtime for the processing of no-suspect

casework.

DNA profiles that result from Program-funded analysis are to be

entered into the Combined DNA Index System (CODIS) so that those profiles

can assist in solving crimes. CODIS is a national DNA information

repository, maintained by the Federal Bureau of Investigation, that allows

local, state, and federal crime laboratories to store and compare DNA

profiles from crime-scene evidence and from convicted offenders. As of

April 2004, the national CODIS database contained 1,762,005 DNA profiles.

CODIS is used by participating forensic laboratories to compare DNA profiles,

with the goal of matching case evidence to other previously unrelated cases

or to persons already convicted of specific crimes.

[2] Throughout this report, we use “FY 2001” to refer to the first year of the Program,

since it was in that fiscal year that the Program was initiated. We acknowledge that the first

year of the Program was primarily implemented during FY 2002.

Audit Approach

We audited the Program to evaluate the: 1) progress made toward

the achievement of Program goals; 2) administration and oversight of the

Program by OJP; 3) oversight of outsourcing laboratories by states receiving

Program funds; and 4) allowability of costs charged to Program awards.

While the Program will span multiple years, our audit focused on grants

awarded during the first year of the Program. Grantee use of these funds, in

many instances, is still on-going.

We reviewed documentation at OJP, conducted audits of four grantees

and various co-grantees within those grantee states,[3] and examined

procedures of three contractor laboratories.[4] The four grantees that we

audited had received approximately 47 percent of the FY 2001 Program

funding to pay for analysis of approximately 10,900 additional cases. In

addition, the three contractor laboratories selected for review received

contracts to provide DNA analysis services for 13 of the 19 grantees that

outsourced analysis of their no-suspect cases.[5]
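As an illustrative cross-check of the coverage figures above (not part of the audit work itself), the following sketch recomputes the four audited grantees' share of FY 2001 Program funding and proposed cases from the award amounts listed in Table 1 of this report. The variable names are assumptions made for illustration.

```python
# Award amounts (dollars) and proposed case counts for the four audited
# grantees, copied from Table 1 of this report.
audited = {
    "Ohio": (2_254_088, 3_068),
    "Texas": (3_379_688, 3_160),
    "New York": (5_039_535, 3_146),
    "Florida": (2_795_086, 1_500),
}
TOTAL_AWARDED = 28_509_380  # Table 1 total for all 25 grantees

audited_funds = sum(funds for funds, _ in audited.values())
audited_cases = sum(cases for _, cases in audited.values())
funding_share = audited_funds / TOTAL_AWARDED

print(f"Audited funds: ${audited_funds:,}")
print(f"Share of Program funding: {funding_share:.1%}")
print(f"Audited cases: {audited_cases:,}")
```

The results are consistent with the "approximately 47 percent" and "approximately 10,900 additional cases" cited above.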

At each of the four grantees that we audited, we reviewed policies and

procedures, documentation of DNA profiles contained in CODIS, reports

describing the required onsite visits that grantees made to their contractor

laboratories, and other compliance documentation. We reviewed this

information to determine whether each grantee and co-grantee: 1) had

adequate policies for chain-of-custody, evidence handling, quality control,

and data review; 2) was uploading completed DNA profiles to CODIS in a

timely manner; 3) was adequately monitoring its contractor laboratories;

4) was in compliance with relevant sections of the Quality Assurance

Standards for Forensic DNA Testing Laboratories (QAS)[6] effective October 1,

1998; and 5) was accredited or certified and had a technical leader on staff.

[3] We audited state and local laboratories in Ohio, Texas, New York, and Florida.

[4] We examined procedures at The Bode Technology Group in Springfield, Virginia; Orchid Cellmark in Germantown, Maryland, and in Dallas, Texas; and LabCorp in Durham, North Carolina.

[5] Six of the 25 grantees (Kansas, Missouri, Maine, New Hampshire, Connecticut, Delaware) did not outsource the analysis of their no-suspect cases to contractor laboratories.

We also audited each of these grantees’ Program awards to determine

whether costs charged to each award were allowable and properly

supported, and whether each grantee was in compliance with selected award

conditions. Those conditions included accurate and timely reporting,

utilization of drawdowns, budget management and control, and contractor

laboratory monitoring. We issued four separate audit reports that detailed

the results of these individual audits.[7]

At each of the three selected contractor laboratories, we reviewed

chain-of-custody and evidence handling policies and procedures, conducted

laboratory tours, and reviewed other documentation to determine if the

laboratory was in compliance with key Program requirements. Those

requirements include maintaining current accreditation, adhering to relevant

sections of the QAS, having an onsite technical leader, and maintaining

controls over billing.

We also reviewed OJP’s oversight of the Program to determine if

awards were made in accordance with applicable legislation, and whether

OJP adequately monitored grantee activities and compliance with Program

requirements. In addition, we assessed OJP’s efforts to monitor progress

made toward achievement of the Program’s stated mission.

The results of the various aspects of our auditing work are described in

the following section.

[6] The Quality Assurance Standards for Forensic DNA Testing Laboratories (QAS) provide DNA casework (forensic) laboratories with minimum standards they should follow to ensure the quality and integrity of the data and competency of the laboratory. Recipients of Program funding must also certify that DNA analysis performed with that funding will comply with the QAS. Additional details on the QAS are found in Appendix III of this report.

[7] See Appendix I for additional audit report information.

Summary of Findings and Recommendations

Assessment of Program Achievements

In evaluating OJP’s progress, we concluded that while Program

grantees were funded for analyses of over 24,700 backlogged

no-suspect cases, current data does not reveal whether the goal of increased laboratory

capacity to process and analyze no-suspect cases is being met, particularly

for those states that are strictly outsourcing DNA analyses.

Our analysis of data we collected from four grantee states indicated an

increase in their forensic profiles uploaded to the national CODIS database

during the period of their Program awards. However, this data did not

distinguish between profiles from Program-funded no-suspect cases and

other DNA uploads. For example, it is unclear from the data whether the

increase in uploads is due to the Program funding, or whether it is because

the laboratory hired, with its own funding, additional staff who helped

increase productivity. Therefore, the data is inconclusive with regard to the

achievement of the Program’s mission.

In addition, we noted two issues that appear to affect the Program’s

success and impact:

1) Only 41 percent, or approximately $11.6 million, of the

$28.5 million of FY 2001 Program funds awarded were drawn

down as of May 31, 2004, nearly two years after awards were

made.

In our judgment, significant delays in drawing down funding

serve as indicators that state grantees are not using Program

funds to increase their analytic capacity and reduce the backlog.

Untimely implementation of each grantee’s planned activities

hinders the entire Program from achieving its objectives.

Further, funds obligated and not drawn down by Program

grantees in a timely manner prevent other viable DNA programs

or Program grantees with more immediate needs from utilizing

the funds.

2) Several profiles that resulted from Program-funded analysis had

not been uploaded to CODIS as of our review. This was caused

primarily by delays in conducting required quality control reviews

of the data. In some cases, nearly a year had passed since

completed DNA profiles were returned by the contractor

laboratories, yet they still had not been uploaded to CODIS.

The crime-solving potential of these profiles cannot be realized

until they are uploaded into CODIS, where they can be matched

to convicted offenders or other crime-scene evidence.
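The drawdown percentage cited in the first issue above can be reproduced with simple arithmetic. The sketch below is illustrative only; it uses the rounded dollar figures from the text, and the function name `drawdown_rate` is a hypothetical helper, not part of any OJP system.

```python
def drawdown_rate(drawn_down: float, awarded: float) -> float:
    """Return the fraction of awarded funds that has been drawn down."""
    if awarded <= 0:
        raise ValueError("awarded must be positive")
    return drawn_down / awarded


# Figures as of May 31, 2004, taken from the finding above (rounded dollars).
AWARDED = 28_500_000
DRAWN_DOWN = 11_600_000

rate = drawdown_rate(DRAWN_DOWN, AWARDED)
undrawn = AWARDED - DRAWN_DOWN

print(f"Drawdown rate: {rate:.0%}")
print(f"Funds not yet drawn down: ${undrawn:,}")
```

On these rounded inputs, roughly $16.9 million of the first-year awards remained undrawn nearly two years after the awards were made.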

We also identified some weaknesses in OJP’s development of Program

goals and in its monitoring of progress toward the achievement of the

Program’s mission. First, at the time our audit began, OJP had not

developed formal goals or objectives for the Program. Subsequent to our

inquiries, Program officials provided us with a list of newly established goals

and objectives for the Program. Neither the performance measurements nor

the new Program goals monitored uploaded profiles, statistics which we

believe would be helpful to Program management in monitoring the

Program’s progress. In addition, neither addressed the Program’s mission of

increased laboratory capacity. While Program management did make an

attempt to revise the measurements to better reflect the Program’s

progress, we concluded that the proposed new measurements still would not

have generated the type of data that would allow Program management to

track the Program’s progress toward achieving its mission.

We recommend that OJP: 1) ensure Program-funded DNA profiles are

reviewed and uploaded to CODIS in a timely manner; 2) develop and follow

procedures that will allow Program officials to more closely monitor grantee

drawdowns, as a means to ensure that adequate progress is being made

toward the achievement of each grantee’s goals and objectives; and

3) develop Program performance measurements, goals, and objectives that

support and allow for the monitoring of progress toward the achievement of

the Program’s mission.

OJP Administration and Oversight of the Program

We reviewed OJP’s administration and oversight of the Program

and determined that weaknesses existed in three areas.

First, OJP issued second-year Program grants to states that had not

drawn down, as of the time the awards were issued, any of their first-year

Program grant funds. Specifically, in FY 2003 OJP awarded seven grants for

the second year of the Program, totaling $10.2 million, to states that had

drawn down essentially none of their initial awards totaling $11.8 million.

We question OJP’s awarding these additional funds to states that had not yet

shown an ability to draw down their prior Program funds in a timely manner.

Second, the requirements instituted by Program management for

contractor laboratories performing no-suspect casework analysis were

inconsistent with those required for state and local laboratories performing

the same work. Specifically, contractor laboratories are required to be

accredited or certified by specific independent organizations, and to have a

technical leader onsite. These conditions are not required for state or local

laboratories that participate in the Program. During our review, we found

five laboratories in the states of Ohio and Texas that were performing

Program-funded no-suspect casework analysis but did not meet one or both

of these requirements. In addition, we were unable to determine from

documentation maintained by OJP whether all co-grantees in six additional

grantee states met these same requirements. We believe that the level of

scrutiny placed upon the contractor laboratories should similarly be placed

upon the state and local laboratories.

Third, OJP has failed to ensure that the federal funds granted under

the Program will benefit the national DNA database. Specifically, we

identified one laboratory, the Fort Worth, Texas, Police Department, that

was uploading Program-funded profiles to Texas’ State DNA Index System

(SDIS, the state level of the CODIS system) but those profiles were not

being uploaded to the National DNA Index System (NDIS, the national level

of the CODIS system). Only profiles uploaded to NDIS are able to aid

investigations across state lines. Therefore, failing to upload to NDIS limits

the crime-solving potential of the profiles. Upon further inquiry, we were

informed that Fort Worth’s profiles could not be uploaded to NDIS based

upon a decision made by the FBI’s NDIS Program Manager. Specifically, the

Fort Worth Police Department, due to the closure of its DNA laboratory,

had hired two contractors, one to analyze the no-suspect cases, and one to

review the data produced by the first contractor and upload that data to

CODIS. In December 2003, the Fort Worth Police Department was notified

by the NDIS Program Manager that its data analysis contractor did not have

the authority to upload forensic profiles on its behalf. Since OJP’s requirements

for the Program only state that profiles are to be uploaded to CODIS (a term

that encompasses the entire database system of indexes at the local, state,

and national level), the Fort Worth Police Department was able to use

Program-funded contractor services without violating OJP requirements,

even though the resultant profiles could not be added to NDIS. We take

issue with such an arrangement, and believe that viable profiles (complete

and allowable) that result from federal funding awarded by OJP should be

uploaded to the NDIS for comparison with DNA profiles from other NDIS

laboratories. During our audit, the Fort Worth Police Department took action

to remedy the arrangements it had for data review, to ensure the profiles

could be added to NDIS. However, the failure of OJP to ensure that all

viable profiles be uploaded to NDIS remains.

We recommend that OJP: 1) more closely monitor previous grantees’

progress in drawing down grant funds prior to awarding them additional

funding; 2) continue to pursue de-obligation of funds for Program grantees

that have shown their inability to draw down their Program funds in a timely

manner and that are unable to provide satisfactory evidence that they will

be able to do so in the near future; 3) ensure that Program requirements in

future years require all laboratories analyzing no-suspect cases to meet the

same requirements; and 4) ensure that Program requirements encourage the

upload of profiles to NDIS and make clear that such upload is ultimately

expected of grantees.

Grantee Oversight of Contractor Laboratories

In assessing the adequacy of grantee oversight of contractor

laboratories, we identified four grantee/co-grantee laboratories that did not

maintain adequate documentation to substantiate that their oversight of

their contractor laboratories met certain requirements imposed by the QAS.

Specifically, these laboratories could not substantiate that a complete onsite

visit of their contractor laboratory had been conducted or that their

contractor’s on-going compliance with applicable standards had been

confirmed.

In addition, six laboratories, including three grantee/co-grantee

laboratories and three contractor laboratories, had incomplete or outdated

outsourcing policies or procedures relating to chain-of-custody or evidence

handling of no-suspect cases. For example, the written policies of each of

the three grantee/co-grantee laboratories failed to describe fully the

procedures currently in use for outsourcing no-suspect casework evidence.

In each instance, the procedures staff used, as described to us, appeared

sufficient to safeguard no-suspect casework evidence. In addition, two

contractor laboratories’ procedures failed to address an aspect of facility

cleaning and decontamination. Finally, one contractor’s procedures failed to

describe methods to properly secure evidence after it had been received and

logged in by the receptionist, but was awaiting the attention of technical

personnel.

Allowability of Grantee Expenditures

We assessed the allowability of costs charged to Program awards by

four grantees. While we found that they materially complied with most

award requirements, we noted deficiencies at all four grantees and found

some costs charged to Program awards that were unallowable and/or

unsupported. As a result, we questioned costs of $111,297 out of a total of

approximately $13.5 million awarded. In addition, we made nine

recommendations addressed to OJP in separate audit reports we issued.

Accordingly, these recommendations were not reiterated in this report.

We also assessed whether selected grantees/co-grantees complied

with Program requirements pertaining to costs paid to contractor

laboratories. We found that one co-grantee was overpaying for services

received from its contractor laboratory, and we questioned $44,640 in

unallowable costs as a result. We recommended that OJP remedy these

questioned costs.

Our audit results are discussed in greater detail in the Findings and

Recommendations section of this report. Our audit objectives, scope, and

methodology, and a list of audited contractor laboratories, grantees, and

co-grantees appear in Appendix I. Audit criteria applied during our work are

described in Appendix III.


TABLE OF CONTENTS

INTRODUCTION

The Combined DNA Index System

Determining the National Casework Backlog

The No Suspect Casework DNA Backlog Reduction Program

Program Background

Program Structure

FINDINGS AND RECOMMENDATIONS

I. Program Impact and Achievement of Program Goals

Impact of the Program on Laboratory Capacity

Untimely Utilization of Program Funds

Profiles Not Uploaded to CODIS

Program Goals and Performance Measurements

Recommendations

II. Administration and Oversight of the Program

Additional Funds to Grantees not Drawing Down Initial Funds Timely

Inconsistent Requirements for Laboratories Performing No-suspect Casework Analysis

Failure to Ensure Program Funding to Support the National DNA Database

Recommendations

III. Grantee Oversight of Contractor Laboratories

Inadequate Contractor Oversight Documentation

Incomplete or Outdated Policies and Procedures

Recommendations

IV. Allowability of Costs Charged to Program Awards

Ohio Bureau of Criminal Identification and Investigation

Texas Department of Public Safety

Florida Department of Law Enforcement

New York State Division of Criminal Justice Services

Recommendations

STATEMENT ON COMPLIANCE WITH LAWS AND REGULATIONS

DNA Identification Act of 1994

DNA Analysis Backlog Elimination Act of 2000

STATEMENT ON MANAGEMENT CONTROLS

OBJECTIVES, SCOPE, AND METHODOLOGY

GLOSSARY OF TERMS AND ACRONYMS

AUDIT CRITERIA

Federal Legislation

Quality Assurance Standards

Solicitation Requirements

OJP Financial Guide

OJP RESPONSE TO AUDIT RECOMMENDATIONS

ANALYSIS AND SUMMARY OF ACTIONS NECESSARY TO CLOSE REPORT

INTRODUCTION

A key objective of the Department of Justice’s (Department) strategic

plan is to improve the crime fighting and criminal justice administration

capabilities of state and local governments. The use of DNA profiles

(computerized records containing DNA characteristics used for identification)

has become an increasingly important crime-fighting tool, and the

Department has created funding opportunities to assist state and local

governments in implementing, expanding, or improving their use of DNA

technology. The Office of Justice Programs (OJP), National Institute of

Justice (NIJ), is the primary Department component disseminating these

funds.

The NIJ, through its Office of Science and Technology, supports

research, development, and improvements in the fields of forensic sciences.

The Office of Science and Technology’s Investigative and Forensic Sciences

Division (IFSD) operates the DNA Backlog Reduction Program with the goal

of eliminating public crime laboratories’ backlogs of DNA evidence.

The NIJ’s DNA Backlog Reduction Program has two components: the

Convicted Offender DNA Backlog Reduction Program, which provides funding

to states to outsource analyses of convicted offender samples to contractor

laboratories; and the No Suspect Casework DNA Backlog Reduction Program

(Program), which provides funding to identify, collect, and analyze DNA

samples from evidence collected in no-suspect cases. The NIJ defines a

no-suspect case as a case in which there is biological evidence from a crime

but where no suspect has been identified or the original suspect has been

eliminated. Our audit focused on the administration and operations of the

program relating to no-suspect cases.[8]

When no-suspect cases are analyzed, the resulting DNA profiles are

compared to local, state, and national DNA databases to search for matches

with profiles from other crime scenes or from convicted offenders. These

comparisons are conducted through the national network of DNA databases,

referred to as the Combined DNA Index System (CODIS), which we discuss

in the following section.

[8] We previously audited the program related to convicted offender samples. For the results of this audit see Audit Report No. 02-20, The Office of Justice Programs Convicted Offender DNA Sample Backlog Reduction Grant Program, issued in May 2002.

The Combined DNA Index System

CODIS is a national DNA information repository that allows local, state,

and federal crime laboratories to store and compare DNA profiles from

crime-scene evidence and from convicted offenders. The Federal Bureau of

Investigation (FBI) oversees CODIS and provides participating laboratories

with special software that organizes and manages their DNA profiles and

related information. Through a hierarchy that encompasses national, state,

and local indexes, CODIS identifies matches between a DNA profile from case

evidence and that of a convicted offender, or among evidence profiles from multiple crime scenes.

DNA profiles are uploaded into the national index (the National DNA

Index System or NDIS) from the state indexes (SDIS), and from the local

indexes (LDIS) into SDIS. The forensic laboratories at each level of the

CODIS hierarchy decide which DNA profiles to upload to the next level, and

conversely the state and national levels determine – generally based upon

applicable state and federal legislation – which profiles they will accept from

the local and state indexes.
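The upload path described above (LDIS to SDIS to NDIS, with each receiving level deciding what it will accept) can be pictured with a small model. This is a simplified sketch for illustration only: the `Index` class, the `complete` flag, and the acceptance rule are hypothetical and do not represent the FBI's CODIS software or actual NDIS acceptance criteria. It also omits the sending laboratory's own choice of which profiles to offer.

```python
from typing import Callable, List, Optional


class Index:
    """One level of a hypothetical three-tier index (LDIS, SDIS, or NDIS)."""

    def __init__(
        self,
        name: str,
        parent: Optional["Index"] = None,
        accepts: Callable[[dict], bool] = lambda profile: True,
    ) -> None:
        self.name = name
        self.parent = parent      # next level up, or None at the top
        self.accepts = accepts    # rule this level applies to incoming profiles
        self.profiles: List[dict] = []

    def upload(self, profile: dict) -> List[str]:
        """Store the profile at each level that accepts it, moving upward.

        Stops at the first level that rejects the profile and returns the
        names of the levels that stored it.
        """
        reached = []
        level: Optional[Index] = self
        while level is not None and level.accepts(profile):
            level.profiles.append(profile)
            reached.append(level.name)
            level = level.parent
        return reached


# Assumed rule for illustration: the national level takes only complete profiles.
ndis = Index("NDIS", accepts=lambda p: p["complete"])
sdis = Index("SDIS", parent=ndis)
ldis = Index("LDIS", parent=sdis)

print(ldis.upload({"id": "A", "complete": True}))   # ['LDIS', 'SDIS', 'NDIS']
print(ldis.upload({"id": "B", "complete": False}))  # ['LDIS', 'SDIS']
```

A profile that stops at the state level, like profile B in this sketch, can be searched only within that state's index.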

Currently, CODIS contains two primary databases: the convicted

offender database and the forensic database, which contains the case

evidence profiles. As of April 2004, NDIS contained 1,681,700 convicted

offender profiles and 80,300 forensic profiles.
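As a quick consistency check, the two NDIS component counts above can be summed and compared with the 1,762,005 total profiles cited in the Executive Summary. The sketch is illustrative; the five-profile difference suggests, but does not establish, that the component figures are rounded.

```python
# NDIS profile counts as of April 2004, as reported in the text above.
convicted_offender_profiles = 1_681_700
forensic_profiles = 80_300

# Total reported in the Executive Summary of this report.
REPORTED_TOTAL = 1_762_005

total = convicted_offender_profiles + forensic_profiles
forensic_share = forensic_profiles / total

print(f"Sum of components: {total:,}")
print(f"Difference from reported total: {REPORTED_TOTAL - total}")
print(f"Forensic share of profiles: {forensic_share:.1%}")
```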

The FBI measures the effectiveness of CODIS by tracking the number

of investigative leads that have been provided through CODIS’ match

capabilities. As of April 2004, the FBI reported a total of 16,695

investigations aided by CODIS.[9]

Determining the National Casework Backlog

The current DNA casework backlog is significant. A report submitted

to Congress by the Attorney General in April 2004 estimated that over

540,000 criminal cases with biological evidence were awaiting DNA testing in

state and local laboratories and at law enforcement agencies across the

country.[10] Those cases include 52,000 homicides and 169,000 sexual

assaults.

[9] CODIS’s primary metric, the “Investigation Aided,” is defined by the FBI as a case that CODIS assisted by producing a match between profiles (i.e., linking two cases together, or linking a case profile to an offender profile) that would not otherwise have been developed.

However, determining the full extent of the backlog is complicated by

the fact that there are more than 17,000 law enforcement agencies that

potentially could be retaining untested forensic DNA evidence. Only about

10 percent of the estimated backlog of casework samples has been

submitted to state or local crime laboratories. Further, even if law

enforcement agencies submitted these cases to state and local crime

laboratories, most of those laboratories lack sufficient evidence storage

facilities for the resulting volume of evidence. In addition, state and local

laboratories have been challenged financially, have had difficulty filling

positions with qualified candidates, and have a backlog of evidence

awaiting analysis from cases already submitted. Police departments often

retain evidence samples without submitting them because they believe that

crime laboratories will not accept the samples or would be unable to analyze

them.

Because of the difficulty of quantifying the no-suspect casework

backlog, our audit could not determine the impact that the Program had on

reducing this backlog.

The No Suspect Casework DNA Backlog Reduction Program

The Program was developed to assist states in reducing the number of

untested no-suspect cases so that the resultant DNA profiles could be

uploaded to CODIS. The Program was authorized under the DNA Analysis

Backlog Elimination Act of 2000. According to the NIJ, the mission of the

Program is “to increase the capacity of state laboratories to process and

analyze crime-scene DNA in cases in which there are no known suspects,

either through in-house capacity building or by outsourcing to accredited

private [contractor] laboratories.”

The Program was initiated with a Solicitation for applications to be

submitted by September 2001. However, due primarily to the events

surrounding September 11, 2001, the Solicitation deadline was extended

into FY 2002. Therefore, while the Program was initiated in FY 2001, the

first grant applications were received and reviewed in FY 2002, and the first

awards were issued toward the end of that fiscal year.[11]

[10] The report was based on a study conducted by Washington State University and Smith Alling Lane, a Tacoma, Washington, law firm.

Sources of the Program’s $28.5 million in funding included a portion of

$15.3 million transferred by the Attorney General from the Asset Forfeiture

Fund Super Surplus,[12] and a portion of $20 million appropriated in FY 2002

by Congress as part of funding for programs authorized under the DNA

Analysis Backlog Elimination Act of 2000. A total of 27 states[13] applied for

awards in the Program’s first year, and 25 received awards.[14]

[11] Throughout this report, we use “FY 2001” to refer to the first year of the Program, since it was in that fiscal year that the Program was initiated. We acknowledge that the Program was primarily implemented during FY 2002.

[12] The Asset Forfeiture Fund Super Surplus in the Department contains excess end-of-year monies that the Attorney General can use for authorized purposes.

[13] For the sake of simplicity, we use the term “state” throughout this report to include both states and U.S. territories, such as Puerto Rico.

[14] Of the 27 states that initially applied for awards in FY 2001/2002, one state withdrew its application and another state decided to withhold its application until the second year of the Program. The remaining 25 states all received awards.

Program Background

The 25 states that received funding proposed to analyze over 24,700

no-suspect cases using grant funds. The following table details the total

grant awards that each state received and the number of no-suspect cases

that they proposed to analyze with the funding:

TABLE 1

PROGRAM GRANTEES AND FUNDS AWARDED

Grantee State     FY 2002 Funds Awarded   Cases Funded15
Maryland                     $5,048,669            3,704
New York                      5,039,535            3,146
Texas                         3,379,688            3,160
Florida                       2,795,086            1,500
Ohio                          2,254,088            3,068
Wisconsin                     1,630,000              850
Michigan                      1,471,170            1,359
Arizona                       1,052,282            1,729
Massachusetts                   917,030            1,000
Alabama                         690,246              463
New Mexico                      550,245              785
Illinois                        500,000              400
Oklahoma                        500,000              500
Kansas                          377,176              450
Maine                           376,554              300
Missouri                        348,412              513
Indiana                         303,558              203
Kentucky                        291,543              400
New Jersey                      286,805              420
Nebraska                        226,494              100
Puerto Rico                     131,678               60
Delaware                        129,413               48
Connecticut                     117,163              300
New Hampshire                    71,716              250
Vermont                          20,829               30
TOTALS                      $28,509,380           24,738

Source: Office of Justice Programs
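The totals reported in Table 1 can be checked against the individual awards. The short Python sketch below is illustrative only: the figures are transcribed from Table 1, while the names `TABLE_1` and `table_totals` are assumptions introduced for this example and are not part of the Program or the audit.

```python
# Awards transcribed from Table 1: state -> (FY 2002 funds awarded in dollars,
# no-suspect cases proposed). Names and structure are illustrative assumptions.
TABLE_1 = {
    "Maryland": (5_048_669, 3_704),
    "New York": (5_039_535, 3_146),
    "Texas": (3_379_688, 3_160),
    "Florida": (2_795_086, 1_500),
    "Ohio": (2_254_088, 3_068),
    "Wisconsin": (1_630_000, 850),
    "Michigan": (1_471_170, 1_359),
    "Arizona": (1_052_282, 1_729),
    "Massachusetts": (917_030, 1_000),
    "Alabama": (690_246, 463),
    "New Mexico": (550_245, 785),
    "Illinois": (500_000, 400),
    "Oklahoma": (500_000, 500),
    "Kansas": (377_176, 450),
    "Maine": (376_554, 300),
    "Missouri": (348_412, 513),
    "Indiana": (303_558, 203),
    "Kentucky": (291_543, 400),
    "New Jersey": (286_805, 420),
    "Nebraska": (226_494, 100),
    "Puerto Rico": (131_678, 60),
    "Delaware": (129_413, 48),
    "Connecticut": (117_163, 300),
    "New Hampshire": (71_716, 250),
    "Vermont": (20_829, 30),
}


def table_totals(table):
    """Return (total dollars awarded, total cases proposed) across all grantees."""
    dollars = sum(funds for funds, _ in table.values())
    cases = sum(n for _, n in table.values())
    return dollars, cases


total_dollars, total_cases = table_totals(TABLE_1)
print(f"{len(TABLE_1)} grantees, ${total_dollars:,}, {total_cases:,} cases")
```

The computed sums agree with the TOTALS row of the table ($28,509,380 and 24,738 cases for 25 grantees).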

Grantees could use Program funding to analyze no-suspect cases in

several different ways:

•  “In-house” analysis by DNA laboratories within the grantee’s state;

•  Outsourcing to state or local laboratories outside of the grantee’s state;

•  Outsourcing to private laboratories; or

•  Any combination of the above.

15

This number represents the total number of no-suspect cases that grantees

proposed would be analyzed with Program funds, through both outsourcing and in-house

analysis.

The methodologies proposed by the states for the analysis of no-suspect

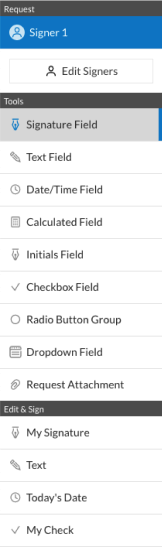

cases are illustrated in the following graphic:

FIGURE 1

Program Summary – FY 2001

Non-Participant

PR

In-House Analysis Only

Outsourcing Only

In-House and Outsourcing

Source: Office of Justice Programs

As shown above, six grantees chose to use in-house analysis only

(Kansas, Missouri, Maine, New Hampshire, Connecticut, and Delaware). For

the 19 grantees that chose to outsource some or all of their DNA analyses,

the following table details which contractor laboratories they selected:

TABLE 2

PROGRAM GRANTEES AND CONTRACTOR LABORATORIES

Contractor laboratories selected (table columns): Bode16; Orchid Cellmark17; LabCorp18; Fairfax Identity19; Other20

Grantee states (table rows): Maryland; New York; Texas; Florida; Ohio; Wisconsin; Michigan; Arizona; Massachusetts; Alabama; New Mexico; Illinois; Oklahoma; Indiana; Kentucky; New Jersey; Nebraska; Puerto Rico; Vermont

[The check marks showing which contractor laboratories each state selected are not legible in this copy.]

16

The Bode Technology Group, Inc. is located in Springfield, Virginia.

17

Orchid Cellmark has U.S. locations in Germantown, Maryland; Dallas, Texas; and

Nashville, Tennessee.

18

Laboratory Corporation of America has 31 locations in the U.S.

19

Fairfax Identity Laboratories is located in Fairfax, Virginia.

20

“Others” include Reliagene Technologies, Inc. in New Orleans, Louisiana;

Identigene in Houston, Texas; Genelex Corporation in Seattle, Washington; GeneScreen in

Dallas, Texas and Dayton, Ohio; DNA Reference Lab, Inc. in San Antonio, Texas; and

University of Nebraska Medical Center in Omaha, Nebraska.

In addition to the direct costs of in-house analysis or outsourcing,

Program funds also could be used for a number of other purposes in support

of the analysis of no-suspect cases. These included paying for costs

associated with oversight of contractor laboratories, purchasing supplies and

equipment, or paying for overtime for the processing of no-suspect

casework.

Program Structure

One stated objective of the Program was to foster cooperation and

collaboration among all of the affected governmental agencies and

departments, such as law enforcement agencies, crime laboratories, and

prosecutors. According to the NIJ, the intent of this objective was to

maximize the use of CODIS for solving no-suspect crimes. Therefore,

each state with more than one DNA laboratory was required to demonstrate

that all of its laboratories were provided with the opportunity to participate

in the Program. Consequently, while the award was issued to one agency

within each state, that grantee was required to coordinate and facilitate

the participation of co-grantees as part of its award. Accordingly, within this

report and throughout our audit work, we included co-grantees (i.e., local

laboratories or law enforcement agencies that also received funds from the

Program) within the scope of our review.

The oversight of the funds distributed by the Program involved various

entities. Specifically:

1)  OJP awarded funds and administered the Program through the

primary grantee in each state;

2)  The primary grantee within each state oversaw the financial

management of each award, including facilitating reimbursement

to each of the co-grantees for their Program-funded

expenditures, and collecting appropriate information from

co-grantees to meet the required reporting obligations to OJP;

and

3)  Each of the grantees, whether the primary grantee or a

co-grantee, was required to oversee its own

Program-funded technical operations and activities, as well as

those of any contractor laboratory it used as part of Program

activities.

In several states, including Ohio and New York, the primary grantees

formalized their relationships with co-grantees in the form of a contract or

agreement to ensure that each co-grantee understood its oversight and

compliance obligations.

Each of these layers of accountability is addressed within this report.

Finding I assesses Program achievements. Finding II addresses OJP’s

responsibilities of oversight, Finding III addresses each grantee’s oversight

over technical operations, and Finding IV addresses financial management of

the primary grantee.

FINDINGS AND RECOMMENDATIONS

I.  Program Impact and Achievement of Program Goals

We determined that the Program has been successful in funding the

analysis of over 24,700 previously backlogged no-suspect cases, as

projected by Program grantees. However, we were unable to

determine whether the Program was achieving its mission of increasing

laboratory capacity. Further, many grantees experienced lengthy

delays in implementing their proposals and were not drawing down

Program funds on a timely basis. We also determined that while the

Program awards helped to increase the volume of no-suspect profiles

uploaded to CODIS, all four of the individual grantees we audited

experienced delays in uploading completed profiles. Finally, OJP had

not developed substantive Program goals, and the Program’s

performance measurements were not adequate to assess whether it

was achieving its stated mission.

Impact of the Program on Laboratory Capacity

As stated previously, the mission of the Program is “to increase the

capacity of state laboratories to process and analyze crime-scene DNA in

cases in which there are no known suspects, either through in-house

capacity building or by outsourcing to accredited private laboratories.” To

accomplish this, OJP awarded approximately $28.5 million in funding to 25

states for the analysis of over 24,700 backlogged no-suspect cases during

the first year of the Program.

We found that measuring the Program’s progress was complicated by

the lack of definitive data linking Program funding to trends observed in

increased uploads of DNA profiles to NDIS from case evidence. For example,

we collected NDIS upload statistics for each of the four grantees we audited

to determine how the Program awards affected the number of complete21

profiles that those states were able to upload to NDIS prior to and during the

award period. Those statistics are illustrated on the following graph:22

21

A profile’s completeness is determined by whether it contains all of the points of

information that the FBI requires for an NDIS profile to be considered. Therefore, we only

included complete profiles in our productivity calculations.

FIGURE 2

Forensic Profiles Uploaded to NDIS

[Bar chart: number of complete forensic profiles uploaded to NDIS by Ohio, Texas, New York, and Florida in each year from 2000 through 2003; vertical axis from 0 to 4,800 profiles]

Source: Program grantees

As the figure illustrates, all four of the grantees demonstrated a

marked increase in total complete profiles analyzed and uploaded to NDIS

after receiving their Program awards. However, since these increases were

inclusive of both the no-suspect cases funded by the Program as well as

other DNA cases that the laboratories were analyzing with local funding, we

cannot conclusively state the extent to which this data establishes that the

Program met its mission. For example, it is unclear from the data whether

the increase in uploads is due to the Program funding, or whether it is

because the laboratory hired, with its own funding, additional staff that

helped increase productivity.

22

Ohio did not join NDIS until November 2000 and had a major computer system

malfunction in 2001, so no profiles went to NDIS in those years. However, profiles for Ohio

uploaded to SDIS from 2000 through 2003 were 136, 558, 1099, and 2084, respectively.

When considered in conjunction with delays in the drawdown of

funding and delays in the upload of profiles, two issues we discuss later in

this section, it becomes even more apparent that without better data a

concrete determination about the Program’s achievement of its mission is

not possible. For example, for those of our four auditees that had not

materially drawn down Program funding, we would conclude that the

Program did not account for the increase in productivity demonstrated in the

previous chart.

Untimely Utilization of Program Funds

During our audit fieldwork, we noted that many of the grantees had

drawn down very little of their award funds, or in some cases had not drawn

down any funds at all.

As of May 31, 2004, only $11.6 million, or about 41 percent of the

$28.5 million awarded from FY 2001 Program funds, had been drawn down

by the 25 Program grantees. For the four grantees included in our audit,

only $5.9 million of the $13.5 million awarded, or 44 percent, had been

drawn down as of the same date. While these awards were made between

July 2002 and September 2002, the largest grantee in terms of dollars

awarded (Maryland), and two additional grantees (Delaware and

Connecticut), had not drawn down any funds as of May 31, 2004. These

three grantees received awards totaling nearly $5.3 million. The following

chart illustrates the drawdown trends for this Program through May 2004:

FIGURE 3

Program Funding Drawdowns

October 2002 through May 2004

[Chart: Monthly Drawdowns (All Grantees) and Cumulative Drawdowns (All Grantees) by date, October 2002 through May 2004; vertical axis: Dollars Awarded to Grantees (in millions), from 0.0 to 28.5]

While the drawdown amounts are not a definitive indicator of specific

grantee Program activities, we believe that drawdowns are an important

indicator of overall grantee progress toward the achievement of proposed

objectives.
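The drawdown percentages cited in this section follow directly from the dollar figures reported as of May 31, 2004. The Python sketch below reproduces that arithmetic; the function name `drawdown_rate` is an assumption introduced for illustration, and the inputs are the rounded figures (in millions of dollars) stated in the text.

```python
def drawdown_rate(drawn_down: float, awarded: float) -> float:
    """Return the share of an award that has been drawn down, as a percentage."""
    if awarded <= 0:
        raise ValueError("awarded must be positive")
    return 100.0 * drawn_down / awarded


# Rounded figures in millions of dollars, as of May 31, 2004, from the text.
all_grantees = drawdown_rate(11.6, 28.5)   # all 25 Program grantees
audited_four = drawdown_rate(5.9, 13.5)    # the four grantees audited

print(f"All grantees: {all_grantees:.1f} percent")
print(f"Audited grantees: {audited_four:.1f} percent")
```

Rounded to whole percentages, these values correspond to the 41 percent and 44 percent figures reported above.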

For example, the award to the New York State Division of Criminal

Justice Services (DCJS) in the amount of $5.04 million, with a term of one

year, was made in September 2002. Yet, as of May 2004, only $500,000,

or less than 10 percent of the award amount, had been drawn down.

According to grantee officials, multiple reasons accounted for their delayed

drawdowns, including the time it took to establish separate contracts with

the co-grantees across the state. In many cases, these contracts were not

finalized until August 2003, nearly a year after the 1-year award was made.

Further, grantee officials in New York stated that amounts drawn down may

not be the best indicators of progress actually being made. Because funds

may have been spent or obligated, but not yet drawn down, they believed

that the amount of funds actually spent and obligated would provide a better

gauge. However, as of April 2004, New York reported total funds spent and

obligated of $2.2 million, which is still only 45 percent of the total awarded.

Further, one co-grantee in the state of New York estimated that its program

will not be completed until December 2004, or 27 months after the initial

1-year award was made.

In another example, the Texas Department of Public Safety (TXDPS),

which had drawn down approximately $2 million of its $3.4 million award as

of May 2004, cited delays in initiating contracts with the co-grantees in its

state as a reason for delays in expending funds. Further, the Florida

Department of Law Enforcement (FDLE), which had drawn down about

$2 million of its $2.8 million award, stated that backlogs at its contractor

laboratories (i.e., the contractor laboratories’ inability to process all the cases they

were receiving from various clients, delaying results back to those clients)

were preventing it from expending its remaining award funds. The FDLE

anticipated completing drawdowns in December 2004. Finally, as of May

2004, the Ohio Bureau of Criminal Identification and Investigation (Ohio

BCI&I) had drawn down approximately $1.4 million of its $2.3 million award.

Officials at the Ohio BCI&I attributed the pace of drawdowns to delays in the submission of

no-suspect cases by law enforcement agencies and in the screening of

evidence by the laboratory for items that were most likely to produce viable

DNA results.

In sum, grantee drawdowns are one gauge of the overall progress

being made toward achieving grantees’ proposed goals. Program awards

were made for an initial period of one year, and the above examples

illustrate that many grantees have not made timely progress in completing

their proposed programs, and have had to obtain extensions from the NIJ.

Not only does this practice hinder the timely achievement of the Program’s

overall mission, but obligated funds not being utilized by this Program could

have been used by other programs or grantees with more immediate needs

for the funding.

Profiles Not Uploaded to CODIS

An additional factor that affects the overall success of the Program is

whether Program-funded profiles are being uploaded to CODIS. During our

audit work at various state and local laboratories, we observed that

approximately 2,538 of the DNA profiles that had resulted from Program-funded analysis had not been uploaded to CODIS. Specifically, we noted that

various laboratories in all four grantee states had received back data from

their contractor laboratories for cases analyzed by those contractors, but

that the resultant DNA profiles had not been uploaded to CODIS as of the

time we reviewed the data.

There is always a delay between when the data is received from a

contractor and when it is uploaded by the state to CODIS. This time lag is

due to the fact that, after receiving the contractor data, states must address

the requirements of the Quality Assurance Standards for Forensic DNA

Testing Laboratories (QAS), effective October 1, 1998, prior to uploading the

data to CODIS. The QAS require that a forensic laboratory ensure that the

data it receives back from its contractor meets certain quality standards. As

part of this, the laboratory must conduct a technical and administrative

review for each case analyzed by the contractor. However, as detailed

below, grantees varied in their ability to address the QAS requirements in a

timely manner.

To assess the reasons that might account for our observation of

profiles not being uploaded to CODIS, we analyzed data provided by the

grantees and co-grantees. As of April 2004, 2,538 profiles from

Program-funded cases returned to the grantees had not been uploaded to

CODIS. We reviewed the reasons provided by the grantees and

co-grantees for this delay and summarized them in the following figure.23

23

Due to the unique circumstances regarding the Fort Worth Police Department’s

inability to upload profiles to NDIS, we excluded their results from this analysis. This issue

is further discussed in Finding II of this report.

FIGURE 4

Reasons Profiles Were Not Uploaded to CODIS

Reason                             Percent of Profiles
Awaiting Data Review                       36%
No DNA                                     34%
Only Victim Profile*                       12%
Insufficient Data                           9%
Mixture*                                    4%
Various Other Technical Reasons             4%
Already in CODIS                            1%

Source: Program grantees and CODIS reports

* “Mixture” refers to profiles that reflect DNA from multiple persons and are too complex to be

appropriately included in CODIS. “Only Victim Profile” refers to those profiles where only the victim’s

DNA was found on the evidence. Victim DNA profiles are not permitted in NDIS.

The most common reason provided for profiles not being uploaded was

“Awaiting Data Review.” In its Solicitation for the No Suspect Casework DNA

Backlog Reduction Program (FY 2001) (Solicitation), the NIJ required that

profiles be “expeditiously uploaded into CODIS.” While no standards or

criteria govern how much time grantees are permitted before they should

upload analyzed data to CODIS, profiles that have not been uploaded to

CODIS cannot be compared and matched to other forensic and offender

profiles, limiting the crime-solving benefits that those profiles can have.

We further examined this issue for seven grantees and co-grantees.

We judgmentally selected 25 cases and, as part of a larger review of those

cases, determined the length of time it took to upload the profiles once the

DNA results were returned by contractor laboratories for each case where

resultant profiles were uploaded. The results of that analysis are

summarized as follows:

FIGURE 5

Average Days for Data Review

[Bar chart: average number of days for data review at seven grantee and co-grantee laboratories: Ohio BCI&I; Fort Worth, TX Police Dept; Jacksonville, FL FDLE Lab; Palm Beach, FL Sheriff's Office; New York State Police; Monroe County, NY Public Safety Lab; and Nassau County, NY Medical Examiner. Bar values range from 9 to 187 days; vertical axis from 0 to 200 days]

Source: Grantee case files and CODIS reports

These results illustrate the vast differences between the various

grantees and co-grantees. For example, the Palm Beach Sheriff’s Office and

the Ohio BCI&I were able to conduct the reviews required by the QAS

for upload to CODIS within an average of 9 days and 12 days, respectively,

from the time the analyzed data was returned by the contractor laboratory.

However, it took the FDLE’s Jacksonville laboratory and the Fort Worth Police

Department24 an average of 187 days and 122 days, respectively, to conduct these reviews

and upload the data to CODIS.
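The averages discussed above measure the time between the return of analyzed data by a contractor laboratory and the upload of the resulting profile to CODIS. A minimal Python sketch of that calculation follows. It is an illustration under stated assumptions: the function name `average_review_days` is introduced here, and the sample dates are hypothetical and are not drawn from the grantee case files.

```python
from datetime import date
from statistics import mean


def average_review_days(cases):
    """Average days from contractor return to CODIS upload.

    `cases` is an iterable of (returned, uploaded) date pairs, one per case.
    """
    return mean((uploaded - returned).days for returned, uploaded in cases)


# Hypothetical dates for illustration only; not taken from the audit files.
sample_cases = [
    (date(2003, 6, 2), date(2003, 6, 10)),   # 8 days
    (date(2003, 8, 4), date(2003, 8, 14)),   # 10 days
]

print(average_review_days(sample_cases))
```

Cases whose profiles have not yet been uploaded have no upload date and therefore fall outside such an average, which is consistent with the analysis above being limited to cases where resultant profiles were uploaded.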

Further, we noted many additional cases where data had not been

reviewed and profiles had not been uploaded that exceeded the times

illustrated above. For example, we noted cases for the FDLE’s laboratories

in Jacksonville and Tampa Bay where analysis results were returned by the

contractor laboratories in June 2003 and August 2003, but the profiles had

not been uploaded to CODIS when we conducted our review in March 2004.

24

The Fort Worth Police Department contracted with the University of North

Texas for the data review and upload to CODIS.

We believe that these data review delays are excessive and not in

accordance with the intent of the Program. DNA profiles not reviewed

cannot be uploaded to CODIS and therefore cannot be linked to other

crime-scene evidence or offender profiles, undermining the mission of the

Program.

The second most common reason, “No DNA,” is the result of

insufficient DNA being detected during the screening process of the evidence

to yield a viable sample for DNA analysis. This reason is not a problem to be

addressed, particularly with old evidence from unsolved crimes, since the

DNA present on the evidence may have deteriorated over time and may not

be of sufficient quantity to yield a DNA profile.

As discussed in the following section, the lack of program goals and

objectives, combined with the previously discussed delays in utilizing

Program funding and in uploading profiles to CODIS, led us to question

whether OJP had established adequate performance measurements to

monitor the Program’s progress.

Program Goals and Performance Measurements

In response to the Government Performance and Results Act, which

requires agencies to develop strategic plans that identify their long-range

goals and objectives and establish annual plans that set forth corresponding

annual goals and indicators of performance, OJP developed one performance

measurement for the Program. The stated mission for the Program is “to

increase the capacity of state laboratories to process and analyze

crime-scene DNA in cases in which there are no known suspects, either

through in-house capacity building or by outsourcing to accredited private

laboratories.” This mission directly supports the following Department

strategic plan goal and objective:

•  Goal: To prevent and reduce crime and violence by assisting state,

tribal, and local community-based programs.

•  Objective: To improve the crime fighting and criminal justice

administration capabilities of state, tribal, and local governments.

We reviewed OJP’s progress toward achieving the single performance

measurement established for the Program: Number of DNA samples/cases

processed in cases where there is no known suspect. For this measurement,

OJP had set a goal of 24,800 samples/cases for FY 2002. However, due to

various factors, including the events of September 11, 2001, disbursement

of funding for this Program was delayed and not completed until September

2002, and OJP did not meet this measurement. The Program funded the

analysis of 24,738 samples or cases in its first year. According to

information provided by the NIJ, only 10,609 cases had been analyzed as of

December 31, 2003. In FY 2003 and FY 2004, OJP established goals of

33,850 and 43,000 samples or cases, respectively.

Even though the targets established for the Program in FY 2002 were

not achieved, we sought to further analyze the established performance

measurement as it relates to the Program’s mission. While its mission is to

increase the capacity of state laboratories to process and analyze

crime-scene DNA in no-suspect cases, the Program’s performance

measurement merely tracks no-suspect samples or cases that have been

“processed.” We concluded that this measurement does not gauge whether

the Program is making progress toward the achievement of its stated

mission.

In discussing the performance measurement with Program

management, they stated that they had attempted to add the following data

points to their performance measurement in FY 2003: 1) number of profiles

entered into CODIS; 2) number of profiles entered into NDIS; 3) number of

investigations aided; and 4) number of cases solved.

According to documentation provided by Program management,

OJP’s budget office informed them that they could not make changes to their

performance measures since they had already been entered into the

"Performance Measurement Table" and been approved. However, while

these measurements may have assisted Program management in monitoring

certain Program achievements, these revised performance measurements

still would not generate the type of data (i.e., laboratory capacity prior to

and during the Program) that would allow Program management to track the

Program’s progress toward achieving its mission of increasing laboratory

capacity.

In addition to assessing whether OJP had met the performance

measurement it had established, we assessed whether there were other

performance measurements that could be established that would provide

decision-makers within the Department and Congress information on

whether the Program was meeting its goals and mission. We concluded that

the Program performance measurement does not address whether the

Program is aiding in reducing the national backlog of no-suspect casework

samples awaiting analysis. While reducing the backlog is not part of the

official mission of the Program, monitoring this information would be useful

in determining whether Program funding is having a positive effect on the

national no-suspect casework backlog, or whether a decrease in the national

no-suspect casework backlog has the beneficial effect of increasing

laboratory capacity across the country.

In a report issued in November 2003, the General Accounting Office

(GAO)25 cited concerns that performance measurements for many NIJ

programs, including this Program, were inadequate to assess results.26 The

report stated that the Program’s one performance measurement was not

outcome-based; rather, it was merely an intermediate measure. GAO

recommended that the NIJ reassess the measures used to evaluate the

Office of Science and Technology’s progress toward achieving its goals and

focus on outcome measures to better assess results where possible.

Further, in a prior report issued by the Office of the Inspector General (OIG),

deficiencies were noted relating to the adequacy of data being collected by

OJP to monitor performance measurements for another DNA-related

program.27

In addition, when we began our audit work in November 2003, we

asked Program officials for the goals and objectives established for the

Program. OJP officials responded that management personnel for the

Program had recently changed and that they were unaware of any

formal goals and objectives for the Program. In response to our inquiry, OJP

officials developed the following goals and objectives for the Program:

•  Ensure that state and local forensic casework laboratories receive

funding to reduce their no-suspect case backlogs;

•  Make future awards in a timely manner;

•  Ensure consistency among applicants;

•  Ensure funding drawdowns meet program and application goals;

•  Provide better award monitoring; and

•  Collect and report accurate statistics and performance measures.

25

Effective July 7, 2004, the General Accounting Office (GAO) became the Government

Accountability Office. The acronym remains the same.

26

GAO Report No. 01-198, titled Better Performance Measures Needed to Assess

Results of Justice’s Office of Science and Technology, dated November 2003.

27

The prior OIG audit report, titled The Office of Justice Programs Convicted

Offender DNA Sample Backlog Reduction Grant Program, Report No. 02-20, was issued in

May 2002.

In our judgment, none of these goals and objectives allow OJP to

assess whether the Program is making progress toward achieving its mission

of increasing the capacity of state laboratories to process and analyze

no-suspect DNA from crime scenes. Some examples of such goals and

objectives could include: 1) To increase grantee laboratory capacity by a

certain percentage, and 2) To reduce grantees’ no-suspect backlogs by a

certain percentage.
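Both example goals above are expressed as percentage changes against a baseline. The Python sketch below shows one way such a measure could be computed. It is an illustration only: the function name `percent_change` and all input values are hypothetical assumptions, and the report does not prescribe a specific formula or provide baseline data.

```python
def percent_change(before: float, after: float) -> float:
    """Return the percentage change from a baseline value to a later value."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (after - before) / before


# Hypothetical values for illustration only; not data from any grantee.
capacity_change = percent_change(before=1_000, after=1_250)  # cases analyzed per year
backlog_change = percent_change(before=4_000, after=3_000)   # no-suspect cases awaiting analysis

print(f"Laboratory capacity: {capacity_change:+.1f} percent")
print(f"No-suspect backlog: {backlog_change:+.1f} percent")
```

A measure of this kind requires data on capacity and backlog both before and during the award period, which is the type of data the existing performance measurement does not capture.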

Recommendations

We recommend that OJP:

1.  Develop and implement procedures that will allow Program officials to

more closely monitor grantee drawdowns as a means to ensure that

adequate progress is being made toward the achievement of each

grantee’s goals and objectives.

2.  Ensure that timely uploads of Program-funded profiles are performed

by all grantees.

3.  Develop Program goals and objectives that support the achievement of

the Program’s mission of increasing laboratory capacity, and

implement a system to track these goals.

4.  Develop performance measurements that allow the monitoring of

progress toward achieving the Program’s mission, such as monitoring

laboratory capacity prior to, during, and at the conclusion of the

Program.

II.  Administration and Oversight of the Program

We reviewed OJP’s administration and oversight of the Program, and

determined that weaknesses existed in three areas: 1) OJP issued

second-year Program grants to states that had not drawn down any of

their first-year Program grant funds by the time the new awards were

issued; 2) the requirements instituted by the Program for contractor

laboratories performing no-suspect casework analysis were

inconsistent with those required for state and local laboratories

performing no-suspect casework analysis; and 3) OJP failed to ensure

that the federal funds granted under the Program will benefit the

national DNA database. These weaknesses hinder the ability of

Program management to maximize Program accomplishments and

ensure consistent operational quality of laboratories funded for

no-suspect casework analysis.

In August 2001, OJP developed and issued Program requirements in

the Program Solicitation. The Program Solicitation specified general grant

guidelines and restrictions, as well as more specific requirements. Grantees

were required to ensure that all analyses of no-suspect cases under the

Program complied with the QAS, and that any profiles resulting from these

analyses be uploaded expeditiously to CODIS. Further, the grantees were to

ensure that their contracting laboratories:28

•  are accredited by the American Society of Crime Laboratory

Directors/Laboratory Accreditation Board (ASCLD/LAB), or certified by

the National Forensic Science Technology Center (NFSTC);

•  adhere to the most current QAS issued by the FBI Director;

•  have a Technical Leader located onsite at the laboratory;

•  provide quality data that can be easily reviewed and uploaded to

CODIS;

•  have the appropriate resources to screen evidence (if applicable); and

•  are paid only for work that is actually performed.

28

See Appendix III for further information regarding Program-specific requirements.

We reviewed OJP’s administration and oversight of the Program to

determine if grants were made in accordance with applicable legislation, and

whether OJP adequately monitored grantee progress and compliance with

Program requirements. In addition, we assessed whether the Program-specific requirements instituted by OJP fully supported the Program’s

mission. We identified the following weaknesses in OJP’s administration and

oversight of the Program.

Additional Funds Awarded to Grantees not Drawing Down Initial

Funds Timely

In FY 2003, OJP awarded grants for the second year of the Program,

totaling $10.2 million, to six states that had drawn down none of their initial

awards, and to one state (New Mexico) that had drawn down less than

1 percent of its initial award, as of the date the second-year grants were

made. The initial awards to these seven states totaled $11.8 million.

Further, for six of the seven states, the applications requested funding for

purposes that were partially or completely identical to those identified in

their initial award application.29

TABLE 3

FY 2003 Program Awards to States Unable to

Timely Use FY 2001 Grant Funds

State          FY 2001 Award Date   FY 2001 Grant Amount   FY 2003 Award Date   FY 2003 Grant Amount
Maryland           09/05/2002            $5,048,669            09/24/2003            $2,072,362
New York           09/20/2002            $5,039,535            09/16/2003            $5,482,020
New Mexico         08/13/2002              $550,245            07/11/2003              $674,414
Oklahoma           08/22/2002              $500,000            07/11/2003              $244,500
New Jersey         08/07/2002              $286,805            06/10/2003            $1,272,254
Nebraska           09/10/2002              $226,494            07/11/2003              $125,086
Connecticut        08/05/2002              $117,163            09/10/2003              $346,758
Total                                   $11,768,911                                 $10,217,394

29

We excluded from this analysis states that had begun to draw down more than

trace amounts of their grant funds by the time they were awarded their second-year grant.

The two largest grantees in the initial award, Maryland and New York,

had not drawn down any of their FY 2001 funds when OJP awarded them

second-year funding. As shown in the table, both states received their

second grant roughly a year after their initial award. Their applications for

the second-year funds requested resources for activities similar to those

funded in their initial award. For example, Maryland was funded in

FY 2001 for the outsourcing of 3,704 no-suspect cases. Similarly, OJP

awarded it funds for the outsourcing of an additional 500 no-suspect cases in

the FY 2003 award. In New York, three laboratories (Monroe County, Nassau

County, and the New York State Police) received funding for the

outsourcing of cases in both FY 2001 and FY 2003.

Oklahoma, Connecticut, and New Jersey also had not drawn down any

of their initial awards when they received second-year funding for activities

similar to the first year. We noted that New Jersey, in particular, received a

significant increase in its second-year grant even though it had failed to

establish a pattern of drawing down its first-year Program funds efficiently.

According to application documents, New Jersey requested this increase to

outsource a significantly larger number of no-suspect cases than was

requested in the first year (1,500 no-suspect cases in FY 2003 versus 220 in

FY 2001).

While the number of cases in their backlogs may have given these states

legitimate bases for requesting funding for additional cases, we question

OJP’s awarding additional funds to states that had failed to establish a

pattern of drawing down their current Program funds in a timely manner.

We noted that although Nebraska had not drawn down any of its initial

award at the time it received additional Program funding, unlike the previous

states mentioned, Nebraska significantly changed its funding request in its

FY 2003 grant application. The initial award was provided to pay for

personnel and consultant/contractual agreements so the Omaha Police

Department could outsource the analysis of no-suspect cases. The FY 2003

award