Enhance Research and Development in India with Digital Signature Licitness

- Quick to start

- Easy-to-use

- 24/7 support

Why choose airSlate SignNow

- Free 7-day trial. Choose the plan you need and try it risk-free.

- Honest pricing for full-featured plans. airSlate SignNow offers subscription plans with no overages or hidden fees at renewal.

- Enterprise-grade security. airSlate SignNow helps you comply with global security standards.

What is the digital signature licitness for research and development in India

The digital signature licitness for research and development in India refers to the legal validity and recognition of digital signatures in the context of research and development activities. This framework ensures that electronic signatures are treated with the same legal standing as traditional handwritten signatures, facilitating smoother transactions and documentation processes. In India, the Information Technology Act of 2000 provides the legal foundation for the use of digital signatures, establishing guidelines for their implementation and enforcement.

How to use the digital signature licitness for research and development in India

Using digital signatures in research and development involves a few straightforward steps. First, obtain a digital signature certificate from a Certifying Authority licensed under the IT Act. You can then electronically sign research proposals, contracts, and agreements by uploading each document to a secure platform such as airSlate SignNow, filling out the necessary fields, and applying your eSignature. The signed documents can then be shared securely with the relevant parties, streamlining collaboration and compliance.

Steps to complete the digital signature licitness for research and development in India

Completing the digital signature licitness process involves a series of clear steps:

- Obtain a digital signature certificate from a licensed certifying authority.

- Choose a reliable electronic signature platform, such as airSlate SignNow, to manage your documents.

- Upload the document that requires a signature.

- Fill out any necessary fields within the document.

- Apply your digital signature securely.

- Save and share the completed document with relevant stakeholders.

Legal use of the digital signature licitness for research and development in India

The legal use of digital signatures for research and development is governed by the Information Technology Act, 2000, which sets out the requirements for valid electronic signatures. To comply, the digital signature must be created using a secure method and be associated with a verified identity. This legal framework protects the integrity of signed documents and provides a mechanism for dispute resolution, so researchers and developers should understand their rights and responsibilities when using digital signatures.
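To make the integrity point concrete, here is a minimal sketch of the hash-then-sign idea behind digital signatures: the document is hashed, the hash is transformed with a private key, and anyone holding the public key can check that the document has not changed. The key parameters, function names, and sample text are illustrative assumptions. This is a toy and not how airSlate SignNow or a Certifying Authority implements signing; real certificates rely on vetted cryptographic libraries and much larger, randomly generated keys.

```python
import hashlib

# Toy key material for illustration only (assumption): two known Mersenne primes.
# Never use fixed or small primes like these for real signatures.
P = 2**127 - 1
Q = 2**89 - 1
N = P * Q                                  # public modulus
E = 65537                                  # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))          # private exponent (needs Python 3.8+)


def digest_as_int(document: bytes) -> int:
    """Hash the document with SHA-256 and reduce it into the toy modulus range."""
    return int.from_bytes(hashlib.sha256(document).digest(), "big") % N


def sign(document: bytes) -> int:
    """Transform the document digest with the private exponent."""
    return pow(digest_as_int(document), D, N)


def verify(document: bytes, signature: int) -> bool:
    """Recover the digest with the public exponent and compare it to a fresh hash."""
    return pow(signature, E, N) == digest_as_int(document)


# Hypothetical document content.
proposal = b"Research proposal v1: collaboration agreement"
signature = sign(proposal)

print(verify(proposal, signature))                 # unchanged document
print(verify(proposal + b" (edited)", signature))  # altered after signing
```

The second check fails because any edit to the document changes its hash, so the signature no longer matches. That property is what lets a signed record serve as evidence that its content is unaltered.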

Security & Compliance Guidelines

When using digital signatures in research and development, adhering to security and compliance guidelines is vital. Users should ensure that the electronic signature platform implements robust security measures, such as encryption and secure access controls. Regular audits and compliance checks should be conducted to ensure that all digital signatures meet legal standards. Additionally, maintaining a clear audit trail of signed documents can help in demonstrating compliance with regulatory requirements and protecting against potential disputes.
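One common way to keep an audit trail tamper-evident is to chain entries together with hashes, so that changing any earlier entry invalidates everything after it. The sketch below illustrates that general technique using only the Python standard library. The event fields, file name, and email addresses are hypothetical, and this is not a description of how airSlate SignNow stores its audit logs.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with this event's content."""
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("ascii") + payload).hexdigest()


def append_event(trail: list, event: dict) -> None:
    """Add an event to the trail, linking it to the entry before it."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    trail.append({"event": event, "hash": entry_hash(prev_hash, event)})


def trail_is_intact(trail: list) -> bool:
    """Recompute every hash in order and report whether all of them match."""
    prev_hash = GENESIS
    for entry in trail:
        if entry["hash"] != entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical events for a single document.
trail = []
append_event(trail, {"action": "uploaded", "doc": "proposal.pdf", "by": "pi@example.org"})
append_event(trail, {"action": "signed", "doc": "proposal.pdf", "by": "pi@example.org"})
print(trail_is_intact(trail))   # trail as recorded

# Simulate someone rewriting history after the fact.
trail[0]["event"]["by"] = "someone.else@example.org"
print(trail_is_intact(trail))   # trail after tampering
```

During a compliance check, a reviewer would rerun the verification and treat any mismatch as a sign that the log was modified after the events were recorded.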

Timeframes & Processing Delays

Understanding timeframes and potential processing delays is important when using digital signatures. Typically, the process of signing and sending documents electronically is swift, often completed within minutes. However, delays can occur due to factors such as network issues or the need for additional approvals. It is advisable to plan for these potential delays, especially in time-sensitive research and development projects, to ensure that all stakeholders are aligned and informed throughout the process.

- Best ROI. Our customers achieve an average 7x ROI within the first six months.

- Scales with your use cases. From SMBs to mid-market, airSlate SignNow delivers results for businesses of all sizes.

-

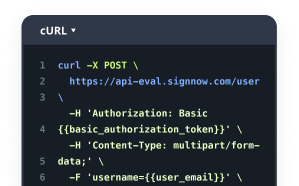

Intuitive UI and API. Sign and send documents from your apps in minutes.

FAQs

- What is the importance of digital signature licitness for research and development in India?

Digital signature licitness for research and development in India is crucial as it ensures the authenticity and integrity of documents. It helps organizations comply with legal requirements, thereby facilitating smoother project approvals and collaborations. This licitness also enhances trust among stakeholders, which is vital in research and development.

- How does airSlate SignNow ensure compliance with digital signature licitness for research and development in India?

airSlate SignNow adheres to the regulations set forth by the Information Technology Act of 2000 in India, ensuring that all digital signatures are legally recognized. Our platform employs advanced encryption and security measures to maintain the integrity of signed documents. This compliance guarantees that your research and development processes are both efficient and legally sound.

- What features does airSlate SignNow offer for managing digital signatures?

airSlate SignNow provides a user-friendly interface for creating, sending, and managing digital signatures. Key features include customizable templates, real-time tracking of document status, and secure storage of signed documents. These features streamline the signing process, making it easier for teams involved in research and development to collaborate effectively.

- Is airSlate SignNow cost-effective for startups in research and development?

Yes, airSlate SignNow offers competitive pricing plans that cater to startups and small businesses in the research and development sector. Our cost-effective solution allows teams to manage digital signatures without incurring high overhead costs. This affordability makes it an ideal choice for organizations looking to enhance their operational efficiency.

- Can airSlate SignNow integrate with other tools used in research and development?

Absolutely! airSlate SignNow seamlessly integrates with various tools commonly used in research and development, such as project management software and cloud storage services. This integration allows for a more streamlined workflow, enabling teams to manage documents and signatures without switching between multiple platforms. Such connectivity enhances productivity and collaboration.

- What are the benefits of using digital signatures in research and development?

Using digital signatures in research and development offers numerous benefits, including enhanced security, faster turnaround times, and reduced paper usage. Digital signature licitness for research and development in India ensures that all signed documents are legally binding, which is essential for compliance. Additionally, it simplifies the approval process, allowing teams to focus on innovation.

- How secure is the digital signature process with airSlate SignNow?

The digital signature process with airSlate SignNow is highly secure, employing advanced encryption protocols to protect sensitive information. Our platform ensures that all signed documents are stored securely and are accessible only to authorized users. This level of security is vital for maintaining the confidentiality of research and development projects.
