eSignature Lawfulness for Animal Science in United Kingdom: Simplify Your Document Processes with airSlate SignNow

- Quick to start

- Easy-to-use

- 24/7 support

Simplified document journeys for small teams and individuals


Why choose airSlate SignNow

- Free 7-day trial. Choose the plan you need and try it risk-free.

- Honest pricing for full-featured plans. airSlate SignNow offers subscription plans with no overages or hidden fees at renewal.

- Enterprise-grade security. airSlate SignNow helps you comply with global security standards.

Your complete how-to guide: eSignature lawfulness for animal science in the United Kingdom

eSignature lawfulness for animal science in the United Kingdom

In the United Kingdom, businesses working in animal science need to be sure that the electronic signatures they collect are lawful. airSlate SignNow offers a user-friendly, cost-effective way to streamline document signing, so businesses can send and eSign documents efficiently.

How to Use airSlate SignNow for Easy Document Signing:

- Launch the airSlate SignNow web page in your browser.

- Sign up for a free trial or log in.

- Upload a document you want to sign or send for signing.

- If you're going to reuse your document later, turn it into a template.

- Open your file and make edits: add fillable fields or insert information.

- Sign your document and add signature fields for the recipients.

- Click Continue to set up and send an eSignature invite.

airSlate SignNow provides a great return on investment with its rich feature set. It is easy to use and scale, making it ideal for small to medium-sized businesses. Additionally, the service offers transparent pricing with no hidden support fees and provides superior 24/7 support for all paid plans.

Experience the benefits of using airSlate SignNow for your document signing needs and streamline your workflow today!


What is eSignature lawfulness for animal science in the United Kingdom

eSignature lawfulness for animal science in the United Kingdom refers to the legal framework that governs the use of electronic signatures on animal science documentation. This includes agreements, consent forms, and regulatory submissions related to animal health, welfare, and research. Under UK law, electronic signatures are recognized as valid and enforceable, provided they meet certain criteria. The relevant framework is the Electronic Communications Act 2000 and the eIDAS Regulation as retained in UK law (often called UK eIDAS). Together, these laws allow electronic signatures to be used securely and effectively in the animal science sector, supporting efficient workflows and compliance with legal requirements.

How to use eSignatures lawfully for animal science in the United Kingdom

Signing animal science documents electronically involves several key steps. First, confirm that the document is suitable for electronic signing. Next, upload it to an electronic signature platform such as airSlate SignNow and fill in any required fields, such as names and dates. Then send the document to the other parties for signature. Each signer receives a notification and can review and eSign the document securely. Once all signatures are collected, store the finalized document electronically so it remains compliant and easy to retrieve.

Steps to complete a lawful eSignature for animal science in the United Kingdom

To eSign animal science documents, follow these steps:

- Choose the document that requires signatures.

- Upload the document to airSlate SignNow or a similar platform.

- Fill in any necessary information, such as the names of signers and relevant dates.

- Send the document to the designated signers for their electronic signatures.

- Monitor the signing progress through the platform's dashboard.

- Once all signatures are obtained, download and securely store the completed document.
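The monitoring step above can be pictured with a small, platform-independent sketch. This is not airSlate SignNow's API or data model; the `Signer` and `SigningRequest` classes, their fields, and the example addresses are all hypothetical names used only to show how pending and completed signatures might be tracked.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Signer:
    """Hypothetical record for one person who must sign."""
    name: str
    email: str
    signed_at: Optional[datetime] = None  # None until the signer has signed


@dataclass
class SigningRequest:
    """Hypothetical record for one document sent out for signature."""
    document_name: str
    signers: List[Signer] = field(default_factory=list)

    def record_signature(self, email: str) -> None:
        """Mark the signer with this email as having signed (first time only)."""
        for signer in self.signers:
            if signer.email == email:
                if signer.signed_at is None:
                    signer.signed_at = datetime.now(timezone.utc)
                return
        raise KeyError(f"No signer with email {email!r}")

    def pending(self) -> List[str]:
        """Emails of signers who have not signed yet, in sending order."""
        return [s.email for s in self.signers if s.signed_at is None]

    def is_complete(self) -> bool:
        """True once there is at least one signer and none are pending."""
        return bool(self.signers) and not self.pending()


if __name__ == "__main__":
    request = SigningRequest(
        "welfare-agreement.pdf",
        [Signer("A. Vet", "vet@example.org"), Signer("B. Farm", "farm@example.org")],
    )
    request.record_signature("vet@example.org")
    print(request.pending())      # ['farm@example.org']
    print(request.is_complete())  # False
```

Only when `is_complete()` returns `True` would the final step, downloading and storing the completed document, apply.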

Legal use of eSignatures for animal science in the United Kingdom

The legal use of eSignatures in the animal science field is governed by regulations that ensure their validity. In the UK, electronic signatures are considered legally binding as long as they demonstrate the signer's intent to authenticate the document. In practice, this means the signing process should be secure and the signer's identity should be verifiable. Compliance with data protection laws is also essential, as animal science documentation may contain sensitive information. By adhering to these legal frameworks, organizations can confidently use electronic signatures in their workflows.

Security & Compliance Guidelines

When using electronic signatures in animal science, it is crucial to follow security and compliance guidelines to protect sensitive information. Key measures include:

- Utilizing secure platforms that comply with data protection regulations.

- Implementing multi-factor authentication for signers to verify their identity.

- Ensuring that documents are encrypted during transmission and storage.

- Maintaining an audit trail of all signing activities for accountability.

By adhering to these guidelines, organizations can mitigate risks and ensure the integrity of their electronic signing processes.
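To make the audit-trail guideline concrete, here is a minimal sketch of a tamper-evident log in which each entry stores a SHA-256 hash that covers the previous entry's hash. It illustrates the general technique only and is not a description of how airSlate SignNow records signing activity; the function names, field names, and example actors are assumptions made for this example.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, timestamp, actor, action):
    """SHA-256 over the entry's fields, including the previous entry's hash."""
    payload = json.dumps(
        {"prev": prev_hash, "ts": timestamp, "actor": actor, "action": action},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(trail, actor, action):
    """Append a signing event to the trail and return the new entry."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    timestamp = datetime.now(timezone.utc).isoformat()
    entry = {
        "prev": prev_hash,
        "ts": timestamp,
        "actor": actor,
        "action": action,
        "hash": _entry_hash(prev_hash, timestamp, actor, action),
    }
    trail.append(entry)
    return entry


def verify_trail(trail):
    """Return True if every entry links to its predecessor and is unmodified."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        if entry["prev"] != prev_hash:
            return False
        expected = _entry_hash(entry["prev"], entry["ts"], entry["actor"], entry["action"])
        if entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    trail = []
    append_event(trail, "sender@example.org", "document sent")
    append_event(trail, "vet@example.org", "document signed")
    print(verify_trail(trail))  # True
    trail[0]["action"] = "document deleted"  # simulate tampering
    print(verify_trail(trail))  # False
```

Because each hash depends on the one before it, editing or removing an earlier entry breaks verification for the rest of the chain, which is what makes this kind of log useful for accountability.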

Documents You Can Sign

In the context of animal science, various types of documents can be signed electronically. These include:

- Research consent forms.

- Animal health and welfare agreements.

- Regulatory submissions and reports.

- Contracts with suppliers or partners.

- Internal policy documents.

Using electronic signatures for these documents streamlines processes and enhances collaboration among stakeholders.

-

Best ROI. Our customers achieve an average 7x ROI within the first six months.

-

Scales with your use cases. From SMBs to mid-market, airSlate SignNow delivers results for businesses of all sizes.

-

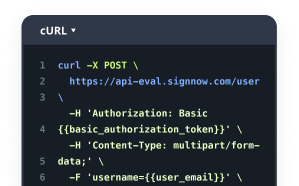

Intuitive UI and API. Sign and send documents from your apps in minutes.

FAQs

- What is the eSignature lawfulness for animal science in the United Kingdom?

The esignature lawfulness for animal science in the United Kingdom is governed by the Electronic Communications Act 2000 and the eIDAS Regulation. These laws recognize electronic signatures as legally binding, provided they meet certain criteria. This ensures that documents related to animal science can be signed electronically without compromising their legal validity.

- How does airSlate SignNow ensure compliance with eSignature lawfulness for animal science in the United Kingdom?

airSlate SignNow complies with the esignature lawfulness for animal science in the United Kingdom by adhering to the regulations set forth in the eIDAS framework. Our platform provides secure and verifiable electronic signatures that meet legal standards. This allows users in the animal science sector to confidently sign documents electronically.

- What features does airSlate SignNow offer for managing documents in animal science?

airSlate SignNow offers a range of features tailored for the animal science industry, including customizable templates, real-time tracking, and secure storage. These features make document management more efficient while supporting compliance with eSignature lawfulness for animal science in the United Kingdom. Users can streamline their workflows and reduce paperwork significantly.

- Is airSlate SignNow cost-effective for businesses in the animal science sector?

Yes, airSlate SignNow is designed to be a cost-effective solution for businesses in the animal science sector. Our pricing plans are flexible and cater to various organizational needs, ensuring that you can find a plan that fits your budget. By utilizing our platform, you can save on printing and mailing costs while maintaining compliance with esignature lawfulness for animal science in the United Kingdom.

- Can airSlate SignNow integrate with other tools used in animal science?

Absolutely! airSlate SignNow offers seamless integrations with various tools commonly used in the animal science field, such as CRM systems and project management software. This enhances your workflow and ensures that all documents remain compliant with esignature lawfulness for animal science in the United Kingdom. Integration simplifies the signing process and improves overall efficiency.

- What are the benefits of using airSlate SignNow for electronic signatures in animal science?

Using airSlate SignNow for electronic signatures in animal science provides numerous benefits, including increased efficiency, reduced turnaround times, and enhanced security. Our platform ensures that all signatures are legally binding under the esignature lawfulness for animal science in the United Kingdom. This allows professionals to focus on their core activities while ensuring compliance and security.

- How secure is airSlate SignNow for handling sensitive documents in animal science?

airSlate SignNow prioritizes security by employing advanced encryption and authentication measures to protect sensitive documents in the animal science sector. Our platform is compliant with industry standards, ensuring that all electronic signatures are secure and legally valid under the esignature lawfulness for animal science in the United Kingdom. You can trust us to safeguard your important information.


Join over 28 million airSlate SignNow users
