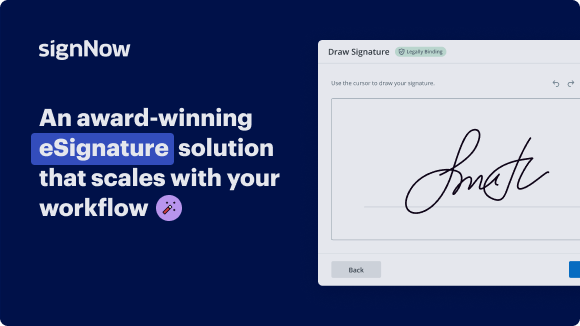

Collaborate on Account Invoice for Engineering with Ease Using airSlate SignNow

See airSlate SignNow eSignatures in action

Be ready to get more

Choose a better solution

Move your business forward with the airSlate SignNow eSignature solution

Add your legally binding signature

Create your signature in seconds on any desktop computer or mobile device, even while offline. Type, draw, or upload an image of your signature.

Integrate via API

Deliver a seamless eSignature experience from any website, CRM, or custom app — anywhere and anytime.

Send conditional documents

Organize multiple documents in groups and automatically route them for recipients in a role-based order.

Share documents via an invite link

Collect signatures faster by sharing your documents with multiple recipients via a link — no need to add recipient email addresses.

Save time with reusable templates

Create unlimited templates of your most-used documents. Make your templates easy to complete by adding customizable fillable fields.

Improve team collaboration

Create teams within airSlate SignNow to securely collaborate on documents and templates. Send the approved version to every signer.

Our user reviews speak for themselves

Collect signatures 24x faster

Reduce costs by $30 per document

Save up to 40h per employee / month

airSlate SignNow solutions for better efficiency

Keep contracts protected

Enhance your document security and keep contracts safe from unauthorized access with dual-factor authentication options. Ask your recipients to prove their identity before they open an account invoice for engineering.

Stay mobile while eSigning

Install the airSlate SignNow app on your iOS or Android device and close deals from anywhere, 24/7. Work with forms and contracts even offline, and complete your account invoice for engineering later when your internet connection is restored.

Integrate eSignatures into your business apps

Incorporate airSlate SignNow into your business applications to quickly handle an account invoice for engineering without switching between windows and tabs. Use airSlate SignNow integrations to save time and effort by eSigning forms in just a few clicks.

Generate fillable forms with smart fields

Update any document with fillable fields, make them required or optional, or add conditions for them to appear. Make sure signers complete your form correctly by assigning roles to fields.

Close deals and get paid promptly

Collect documents from clients and partners in minutes instead of weeks. Ask your signers to complete the account invoice for engineering, and include a charge request field in your document to automatically collect payments during the contract signing.

Why choose airSlate SignNow

- Free 7-day trial. Choose the plan you need and try it risk-free.

- Honest pricing for full-featured plans. airSlate SignNow offers subscription plans with no overages or hidden fees at renewal.

- Enterprise-grade security. airSlate SignNow helps you comply with global security standards.

Discover how to streamline your account invoice for Engineering process with airSlate SignNow.

Looking for a way to streamline your invoicing process? Follow these quick steps to collaborate on the account invoice for Engineering or request signatures on it with our user-friendly platform:

- Create an account by starting a free trial, then log in with your email credentials.

- Upload the file you need to eSign (up to 10MB) from your PC or the cloud.

- Proceed by opening your uploaded invoice in the editor.

- Perform all the necessary actions with the file using the tools from the toolbar.

- Click on Save and Close to keep all the modifications made.

- Send or share your file for signing with all the necessary recipients.

The account invoice for Engineering process just got easier! With airSlate SignNow’s user-friendly platform, you can easily upload and send invoices for electronic signatures. No more printing a hard copy, signing by hand, and scanning. Start a free trial and let the platform simplify the whole process for you.
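If you prepare invoices with a script before uploading them, a quick local size check can catch files that exceed the 10MB upload limit mentioned in the steps. The sketch below is only an illustration: the function name `fits_upload_limit` and the constant `MAX_UPLOAD_BYTES` are hypothetical, and it assumes "10MB" means 10 * 1024 * 1024 bytes, which this page does not specify. It does not call any airSlate SignNow API.

```python
import os
import tempfile

# Assumption: "10MB" is read as 10 * 1024 * 1024 bytes. The page does not say
# whether the limit uses binary or decimal megabytes.
MAX_UPLOAD_BYTES = 10 * 1024 * 1024


def fits_upload_limit(path: str, limit_bytes: int = MAX_UPLOAD_BYTES) -> bool:
    """Return True if the file at `path` is no larger than `limit_bytes`."""
    return os.path.getsize(path) <= limit_bytes


if __name__ == "__main__":
    # Demo with a throwaway 1 KiB file standing in for an invoice PDF.
    with tempfile.NamedTemporaryFile(suffix=".pdf", delete=False) as tmp:
        tmp.write(b"\0" * 1024)
    try:
        print(fits_upload_limit(tmp.name))
    finally:
        os.remove(tmp.name)
```

Running the check before you upload lets you compress or split an oversized invoice ahead of time instead of finding out during the upload step.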

How it works

Access the cloud from any device and upload a file

Edit & eSign it remotely

Forward the executed form to your recipient

Get legally-binding signatures now!

FAQs

- What is an account invoice for engineering?

  An account invoice for engineering is a detailed document that outlines the services provided by engineering firms, including costs associated with specific projects. It is essential for tracking project expenses and ensuring timely payments. Using airSlate SignNow can streamline the process of managing these invoices effectively.

- How can airSlate SignNow help with managing account invoices for engineering?

  airSlate SignNow enables engineering firms to create, send, and eSign account invoices seamlessly. This reduces the risk of errors and speeds up the invoicing process, ensuring that you receive payments faster. With our user-friendly interface, managing account invoices has never been easier.

- What are the pricing options for airSlate SignNow for engineering firms?

  airSlate SignNow offers various pricing plans designed to fit the needs of engineering firms of all sizes. Our pricing is competitive and includes features tailored to managing account invoices for engineering. You can choose a plan that best suits your business requirements and budget.

- Are there bulk invoicing options available for engineering firms?

  Yes, airSlate SignNow provides bulk invoicing options that are perfect for engineering firms handling multiple projects. This feature allows you to create and send multiple account invoices for engineering efficiently. Streamlining this process saves time and increases productivity.

- Can I integrate airSlate SignNow with other accounting software?

  Absolutely! airSlate SignNow offers integrations with various accounting software, making it easy to sync your account invoices for engineering with your existing systems. This seamless integration helps you maintain accurate financial records and manage your invoices more effectively.

- What features does airSlate SignNow offer for processing account invoices for engineering?

  airSlate SignNow comes equipped with features such as customizable templates, digital signatures, and automation workflows specifically for account invoices for engineering. These features simplify the invoicing process, allowing you to focus more on your engineering projects and less on paperwork.

- Is there a free trial available for airSlate SignNow?

  Yes, airSlate SignNow offers a free trial for you to explore our platform and see how it can assist you in managing your account invoices for engineering. This trial lets you experience our features firsthand, helping you determine if it's the right solution for your needs before making a commitment.

Get more for account invoice for engineering

- Graphic designer invoice for Product quality

- Create Graphic Designer Invoice for Inventory

- Graphic designer invoice for Security

- Create Graphic Designer Invoice for R&D

- Graphic designer invoice for Personnel

- Modern Invoice Template for Facilities

- Modern invoice template for Finance

- Modern Invoice Template for IT

Find out other account invoice for engineering

- Easily add signature to PDF without Acrobat for ...

- Discover free methods to sign a PDF document online ...

- How to add electronic signature to PDF on iPhone with ...

- How to sign PDF files electronically on Windows with ...

- How to sign a PDF file on phone with airSlate SignNow

- Experience seamless signing with the iPhone app for ...

- Easily sign PDF without Acrobat for seamless document ...

- Easily email a document with a signature using airSlate ...

- How to sign a document online and email it with ...

- How to use digital signature certificate on PDF ...

- How to use e-signature in Acrobat for effortless ...

- How to use digital signature on MacBook with airSlate ...

- Discover effective methods to sign a PDF online with ...

- Effortlessly sign PDFs with the linux pdf sign command

- Easily sign PDF documents on Windows with airSlate ...

- Easily sign a PDF file and email it back with airSlate ...

- Effortlessly sign PDF documents on phone

- Sign PDF document with certificate effortlessly

- Easily signing a PDF document on my iPhone

- Sign PDF online with electronic signature easily and ...